Context Engineering

Why Context Engineering Determines the Stability and Controllability of AI Agents

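TL;DR

- Context Engineering is the key to making AI agents execute reliably: it covers the system prompt, tool instructions, memory, constraints, and observability, not just writing "a better prompt".

- The most common failures come not from a weak model but from unclear context design: silent skips, inconsistent state, misused tools, and missing error handling.

- The most effective way to iterate is deploy → observe → refine → test, distilling behavioral data into reusable rules and rubrics.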

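Core Concepts

Context Engineering can be understood as the systematic design and iteration of an AI agent's input context, so that the agent is more reliable and more controllable on complex tasks. Compared with "prompt engineering" for a single LLM call, it puts more emphasis on:

- System prompt: defines the role, boundaries, allowed and forbidden actions, and output format

- Task constraints: completion criteria, quality standards, skip rules, and retry rules

- Tool descriptions: when to use which tool, how to fill in parameters, and how to handle errors

- Memory management: how state is saved, what needs long-term storage, and how context is compressed

- Error handling: retry and fallback after failures, and when a human-in-the-loop is required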

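How to Apply

You can treat Context Engineering as an engineering loop:

- Write the goal as a verifiable definition (what good looks like)

- Write the execution process as an observable state machine (plan/status/log)

- Use tools to make key steps verifiable (especially factual claims and external writes)

- Write failure modes into the system prompt (for example: no silent skips, always record a justification)

- Keep replaying and reviewing real tasks (turn "occasional bugs" into explicit rules)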
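The "observable state machine" item can be sketched as a small transition table. This is a minimal illustration, assuming the three status values used in this guide (todo, in_progress, completed) plus a hypothetical `skipped` status for justified skips; `ALLOWED`, `Task`, and `advance` are illustrative names, not part of any framework.

```python
from dataclasses import dataclass, field

# Assumed transition table; "skipped" is a hypothetical extra status.
ALLOWED = {
    "todo": {"in_progress", "skipped"},
    "in_progress": {"completed", "todo"},  # back to todo on retry
    "completed": set(),
    "skipped": set(),
}


@dataclass
class Task:
    task_id: str
    status: str = "todo"
    log: list = field(default_factory=list)


def advance(task: Task, new_status: str, note: str = "") -> None:
    """Move a task to new_status, rejecting illegal or unjustified moves."""
    if new_status not in ALLOWED[task.status]:
        raise ValueError(f"{task.task_id}: {task.status} -> {new_status} not allowed")
    if new_status == "skipped" and not note.strip():
        raise ValueError(f"{task.task_id}: a skip requires a justification")
    # Every accepted transition is recorded, so the run can be replayed later.
    task.log.append((task.status, new_status, note))
    task.status = new_status
```

Because every transition goes through `advance`, a silent skip becomes an error instead of a gap in the log.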

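Case Study: Deep Research Agent

The two issues below are very typical; almost every Deep Research Agent runs into them:

Issue 1: Incomplete Task Execution

Symptom: the orchestrator planned 3 search tasks but executed only 2; the third was silently skipped.

Cause: the system prompt did not spell out the task completion rules, so the agent assumed on its own that a task was redundant or unnecessary.

Practical fix (choose one):

- Flexible: allow skipping, but require a written justification and a status update

- Strict: every planned task must be executed

(The following example rules can be reused directly.)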

You are a deep research agent responsible for executing comprehensive research tasks.

TASK EXECUTION RULES:

- For each search task you create, you MUST either:

1. Execute a web search and document findings, OR

2. Explicitly state why the search is unnecessary and mark it as completed with justification

- Do NOT skip tasks silently or make assumptions about task redundancy

- If you determine tasks overlap, consolidate them BEFORE execution

- Update task status in the spreadsheet after each action

Issue 2: Lack of Debugging Visibility

Symptom: without logs and state tracking, it is hard to know why the agent behaved as it did, and therefore hard to iterate.

Practical fix: use a simple task tracker (spreadsheet or file) to enforce observability:

- Task ID

- Search query

- Status (todo/in_progress/completed)

- Results summary

- Timestamp

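Self-check rubric

- Has prompt ambiguity been eliminated (are the instructions executable and verifiable)?

- Are required vs. optional actions spelled out?

- Is there observability (logs, state, tool call replay)?

- Are error cases covered (retry on failure, partial failure, cases requiring human intervention)?

- Does the agent behave consistently on the same input (behavioral consistency)?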

Practice

Exercise: write a Context Engineering checklist for a "weekly market research agent".

- Required: plan format, allowed status values, tool usage rules, retry-on-failure rules, and an output schema.

- Bonus: define a simple evaluation rubric (for example, a 10-point scale: coverage / correctness / citations / actionability).
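One possible starting point for the bonus item is sketched below. It assumes each of the four criteria is scored from 0 to 10 and that all four carry equal weight; both choices are assumptions made for illustration, and `rubric_score` is a hypothetical helper name.

```python
CRITERIA = ("coverage", "correctness", "citations", "actionability")


def rubric_score(scores: dict[str, float]) -> float:
    """Average the four criteria (each 0-10) into one 0-10 score."""
    missing = [c for c in CRITERIA if c not in scores]
    if missing:
        raise KeyError(f"missing criteria: {missing}")
    for c in CRITERIA:
        if not 0 <= scores[c] <= 10:
            raise ValueError(f"{c} must be between 0 and 10")
    # Equal weighting is an assumption; adjust per use case.
    return sum(scores[c] for c in CRITERIA) / len(CRITERIA)
```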

References

Original (English)

Context engineering is a critical practice for building reliable and effective AI agents. This guide explores the importance of context engineering through a practical example of building a deep research agent.

Context engineering involves carefully crafting and refining the prompts, instructions, and constraints that guide an AI agent's behavior to achieve desired outcomes.

What is Context Engineering?

Context engineering is the process of designing, testing, and iterating on the contextual information provided to AI agents to shape their behavior and improve task performance. Unlike simple prompt engineering for single LLM calls, context engineering for agents involves, but is not limited to:

- System prompts that define agent behavior and capabilities

- Task constraints that guide decision-making

- Tool descriptions that clarify when and how to use available functions/tools

- Memory management for tracking state across multiple steps

- Error handling patterns for robust execution

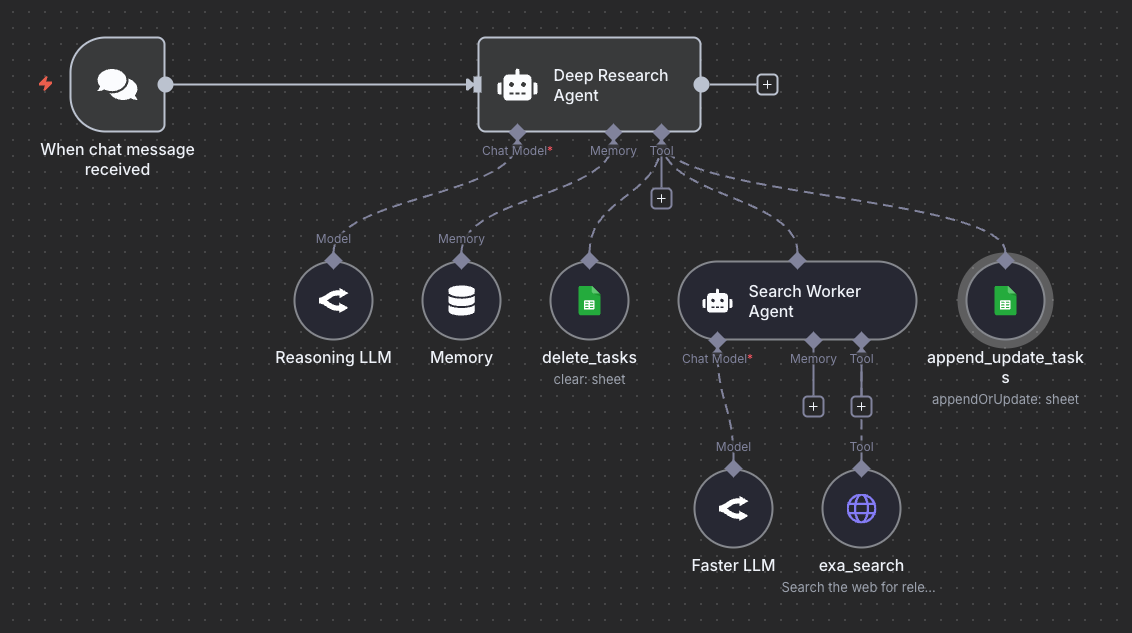

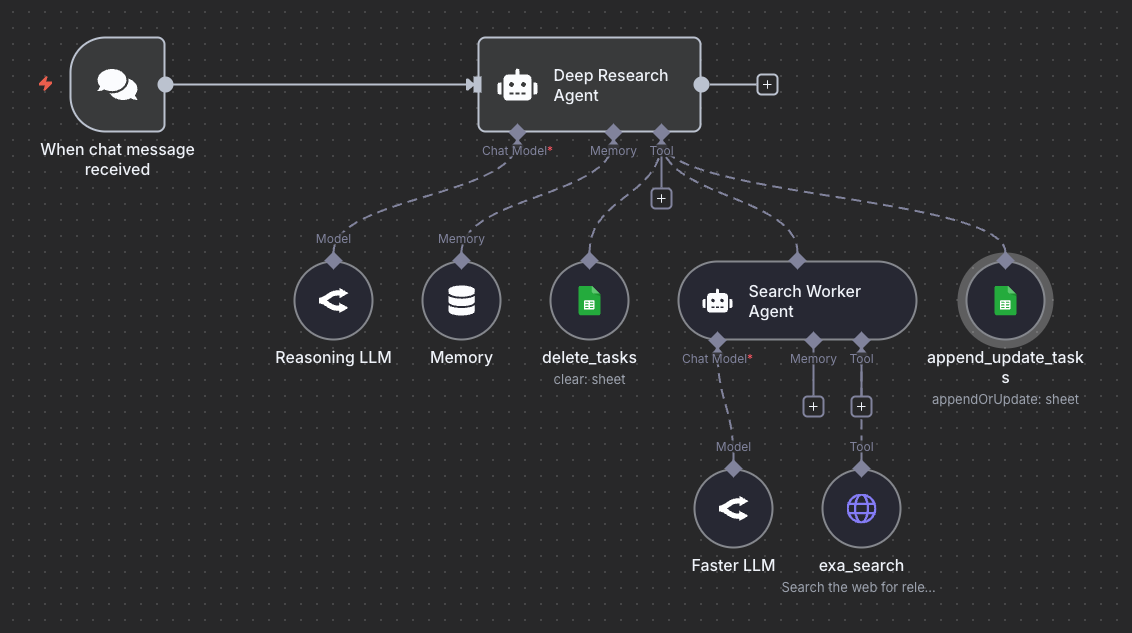

Building a Deep Research Agent: A Case Study

Let's explore context engineering principles through an example: a minimal deep research agent that performs web searches and generates reports.

The Context Engineering Challenge

When building the first version of this agent system, the initial implementation revealed several behavioral issues that required careful context engineering:

Issue 1: Incomplete Task Execution

Problem: When running the agentic workflow, the orchestrator agent often creates three search tasks but only executes searches for two of them, skipping the third task without explicit justification.

Root Cause: The agent's system prompt lacked explicit constraints about task completion requirements. The agent made assumptions about which searches were necessary, leading to inconsistent behavior.

Solution: Two approaches are possible:

- Flexible Approach (current): Allow the agent to decide which searches are necessary, but require explicit reasoning for skipped tasks

- Strict Approach: Add explicit constraints requiring search execution for all planned tasks

Example system prompt enhancement:

You are a deep research agent responsible for executing comprehensive research tasks.

TASK EXECUTION RULES:

- For each search task you create, you MUST either:

1. Execute a web search and document findings, OR

2. Explicitly state why the search is unnecessary and mark it as completed with justification

- Do NOT skip tasks silently or make assumptions about task redundancy

- If you determine tasks overlap, consolidate them BEFORE execution

- Update task status in the spreadsheet after each action
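Prompt rules like these are easier to trust when a check outside the model enforces them as well. The sketch below is one way to flag silent skips after a run. It assumes a hypothetical tracker format, a dict mapping each task ID to a row with `status`, `searched`, and `justification` keys; none of these names come from a specific framework.

```python
def find_silent_skips(planned_ids, tracker):
    """Return planned task IDs that were neither executed nor justified.

    tracker maps task_id -> {"status": str, "searched": bool, "justification": str}.
    """
    skipped = []
    for task_id in planned_ids:
        row = tracker.get(task_id)
        if row is None or row.get("status") != "completed":
            skipped.append(task_id)  # never recorded or never finished
        elif not row.get("searched") and not row.get("justification", "").strip():
            skipped.append(task_id)  # marked completed with no search and no reason
    return skipped
```

An empty result means every planned task was either searched or explicitly justified, which matches the flexible approach described above.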

Issue 2: Lack of Debugging Visibility

Problem: Without proper logging and state tracking, it was difficult to understand why the agent made certain decisions.

Solution: For this example, it helps to implement a task management system using a spreadsheet or text file (for simplicity) with the following fields:

- Task ID

- Search query

- Status (todo, in_progress, completed)

- Results summary

- Timestamp

This visibility enables:

- Real-time debugging of agent decisions

- Understanding of task execution flow

- Identification of behavioral patterns

- Data for iterative improvements
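The tracker fields listed above map naturally onto a small record type. The following is a minimal sketch using only the Python standard library; `TaskRecord` and `to_csv` are illustrative names, and writing CSV text is just one assumed storage choice in place of a spreadsheet.

```python
import csv
import io
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

STATUSES = ("todo", "in_progress", "completed")


@dataclass
class TaskRecord:
    task_id: str
    search_query: str
    status: str = "todo"
    results_summary: str = ""
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self):
        # Reject status values outside the agreed set.
        if self.status not in STATUSES:
            raise ValueError(f"unknown status: {self.status!r}")


def to_csv(records) -> str:
    """Serialize records to CSV text with one header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(TaskRecord.__dataclass_fields__))
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buf.getvalue()
```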

Context Engineering Best Practices

Based on this case study, here are key principles for effective context engineering:

1. Eliminate Prompt Ambiguity

Bad Example:

Perform research on the given topic.

Good Example:

Perform research on the given topic by:

1. Breaking down the query into 3-5 specific search subtasks

2. Executing a web search for EACH subtask using the search_tool

3. Documenting findings for each search in the task tracker

4. Synthesizing all findings into a comprehensive report

2. Make Expectations Explicit

Don't assume the agent knows what you want. Be explicit about:

- Required vs. optional actions

- Quality standards

- Output formats

- Decision-making criteria

3. Implement Observability

Build debugging mechanisms into your agentic system:

- Log all agent decisions and reasoning

- Track state changes in external storage

- Record tool calls and their outcomes

- Capture errors and edge cases
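Recording tool calls and their outcomes can be as simple as wrapping each tool function. The decorator below is a sketch built on Python's standard `logging` module; `logged_tool` is a hypothetical helper, and the `search_tool` body is a placeholder rather than a real search API call.

```python
import functools
import json
import logging

logger = logging.getLogger("agent.tools")


def logged_tool(fn):
    """Log every call to a tool function, including failures."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        call = {"tool": fn.__name__, "args": args, "kwargs": kwargs}
        try:
            result = fn(*args, **kwargs)
        except Exception as exc:
            # Capture the error, then re-raise so the caller can handle it.
            logger.error(json.dumps({**call, "error": repr(exc)}, default=str))
            raise
        logger.info(json.dumps({**call, "result": result}, default=str))
        return result
    return wrapper


@logged_tool
def search_tool(query: str) -> list:
    # Placeholder body: a real tool would call a search API here.
    return [f"result for {query}"]
```

Each log line is a JSON object, so runs can be filtered by tool name or searched for errors afterwards.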

⚠️ Warning: Pay close attention to every run of your agentic system. Strange behaviors and edge cases are opportunities to improve your context engineering efforts.

4. Iterate Based on Behavior

Context engineering is an iterative process:

- Deploy the agent with initial context

- Observe actual behavior in production

- Identify deviations from expected behavior

- Refine system prompts and constraints

- Test and validate improvements

- Repeat

5. Balance Flexibility and Constraints

Consider the tradeoff between:

- Strict constraints: More predictable but less adaptable

- Flexible guidelines: More adaptable but potentially inconsistent

Choose based on your use case requirements.

Advanced Context Engineering Techniques

Layered Context Architecture

Context engineering applies to every stage of building an AI agent, and it often helps to think of context as a hierarchy. For our basic agentic system, context can be organized into the following layers:

- System Layer: Core agent identity and capabilities

- Task Layer: Specific instructions for the current task

- Tool Layer: Descriptions and usage guidelines for each tool

- Memory Layer: Relevant historical context and learnings
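One way to make the layers concrete is to assemble the final prompt from them in a fixed order. This is a sketch only: the `## ... LAYER` header format and the `build_context` name are arbitrary choices made for illustration, not a convention the guide prescribes.

```python
LAYER_ORDER = ("system", "task", "tool", "memory")


def build_context(layers: dict[str, str]) -> str:
    """Join the non-empty layers in a fixed order under labeled headers."""
    unknown = set(layers) - set(LAYER_ORDER)
    if unknown:
        raise ValueError(f"unknown layers: {sorted(unknown)}")
    parts = []
    for name in LAYER_ORDER:
        text = layers.get(name, "").strip()
        if text:
            parts.append(f"## {name.upper()} LAYER\n{text}")
    return "\n\n".join(parts)
```

Keeping the layers separate until this final step makes it possible to change one layer (for example, tool guidelines) and test the effect in isolation.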

Dynamic Context Adjustment

Another approach is to adjust context dynamically at run time instead of fixing it up front. In our example, the adjustment can depend on:

- Task complexity

- Available resources

- Previous execution history

- Error patterns
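A rough sketch of such an adjustment follows. It uses two of the signals above (task complexity and error patterns); the threshold of 5 subtasks, the guidance strings, and the `adjust_context` name are all assumptions chosen for illustration.

```python
def adjust_context(base_prompt: str, *, subtask_count: int, recent_failures: int) -> str:
    """Append extra guidance to base_prompt based on simple run signals."""
    extras = []
    if subtask_count > 5:  # assumed threshold for a "complex" task
        extras.append("Group related subtasks and report progress after each group.")
    if recent_failures > 0:  # error pattern seen in earlier runs
        extras.append("If a search fails, retry once with a rephrased query.")
    if not extras:
        return base_prompt
    guidance = "\n".join(f"- {item}" for item in extras)
    return f"{base_prompt}\n\nADDITIONAL GUIDANCE:\n{guidance}"
```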

Context Validation

Evaluation is key to ensuring context engineering techniques are working as they should for your AI agents. Before deployment, validate your context design:

- Completeness: Does it cover all important scenarios?

- Clarity: Is it unambiguous?

- Consistency: Do different parts align?

- Testability: Can you verify the behavior?

Common Context Engineering Pitfalls

Below are a few common context engineering pitfalls to avoid when building AI agents:

1. Over-Constraint

Problem: Too many rules make the agent inflexible and unable to handle edge cases.

Example:

NEVER skip a search task.

ALWAYS perform exactly 3 searches.

NEVER combine similar queries.

Better Approach:

Aim to perform searches for all planned tasks. If you determine that tasks are redundant, consolidate them before execution and document your reasoning.

2. Under-Specification

Problem: Vague instructions lead to unpredictable behavior.

Example:

Do some research and create a report.

Better Approach:

Execute research by:

1. Analyzing the user query to identify key information needs

2. Creating 3-5 specific search tasks covering different aspects

3. Executing searches using the search_tool for each task

4. Synthesizing findings into a structured report with sections for:

- Executive summary

- Key findings per search task

- Conclusions and insights

3. Ignoring Error Cases

Problem: Context doesn't specify behavior when things go wrong.

Solution: In some cases, it helps to add explicit error handling instructions to the agent's context:

ERROR HANDLING:

- If a search fails, retry once with a rephrased query

- If retry fails, document the failure and continue with remaining tasks

- If more than 50% of searches fail, alert the user and request guidance

- Never stop execution completely without user notification
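The same policy can also be mirrored in the orchestration code around the agent. The sketch below assumes `search` and `rephrase` are caller-supplied callables (hypothetical here) and implements the retry-once rule and the 50% failure threshold from the rules above.

```python
def run_searches(queries, search, rephrase):
    """Run each query, retrying once with a rephrased query on failure.

    search and rephrase are caller-supplied callables (hypothetical here).
    Returns (results, failures, needs_user_guidance).
    """
    results, failures = {}, {}
    for query in queries:
        try:
            results[query] = search(query)
            continue
        except Exception:
            retry_query = rephrase(query)
        try:
            results[query] = search(retry_query)
        except Exception as second_error:
            # Document the failure and continue with the remaining tasks.
            failures[query] = repr(second_error)
    # Alert the user when more than 50% of searches failed.
    needs_user_guidance = len(failures) > 0.5 * len(queries)
    return results, failures, needs_user_guidance
```

The function never raises on a failed search, so execution does not stop without the caller seeing the `failures` map and the `needs_user_guidance` flag.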

Measuring Context Engineering Success

Track these metrics to evaluate context engineering effectiveness:

- Task Completion Rate: Percentage of tasks completed successfully

- Behavioral Consistency: Similarity of agent behavior across similar inputs

- Error Rate: Frequency of failures and unexpected behaviors

- User Satisfaction: Quality and usefulness of outputs

- Debugging Time: Time required to identify and fix issues
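Two of these metrics can be computed directly from run records. The sketch below assumes each run is logged as a dict with `tasks_planned`, `tasks_completed`, and `errors` counts; that record shape and the `summarize_runs` name are assumptions for illustration.

```python
def summarize_runs(runs):
    """Compute completion and error rates from a list of run records.

    Each run is a dict with "tasks_planned", "tasks_completed", and "errors" counts.
    """
    planned = sum(r["tasks_planned"] for r in runs)
    completed = sum(r["tasks_completed"] for r in runs)
    errors = sum(r["errors"] for r in runs)
    return {
        "task_completion_rate": completed / planned if planned else 0.0,
        "errors_per_run": errors / len(runs) if runs else 0.0,
    }
```

Behavioral consistency, user satisfaction, and debugging time need other data sources (repeated runs on the same input, user feedback, and issue tracking) and are not covered by this sketch.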

Context engineering is not a one-time activity but an ongoing practice that requires:

- Systematic observation of agent behavior

- Careful analysis of failures and edge cases

- Iterative refinement of instructions and constraints

- Rigorous testing of changes

We will be covering these principles in more detail in upcoming guides. By applying these principles, you can build AI agent systems that are reliable, predictable, and effective at solving complex tasks.
