Context Engineering Deep Dive

Context Engineering in Practice: Architecture, Tool Definitions, and Observability

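TL;DR

- The essence of context engineering is taking an agent from "it runs" to "it runs reliably": the system prompt, tool definitions, memory, division of responsibilities, error handling, and observability are all required.

- When a single agent takes on too many responsibilities, the most common results are runaway context length, missed tasks, inconsistent state, and confused tool use.

- A cost-effective upgrade path: introduce an orchestrator and sub-agent split so the search worker gets its own isolated context, persist key intermediate artifacts to external memory (files/notes), and add a verification gate.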

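Reading Guide

This article leans toward engineering practice: it uses a Deep Research Agent example to explain why context engineering often matters more than "switching to a stronger LLM." While reading, keep three main questions in mind:

- Architecture: Which responsibilities belong in the orchestrator, and which should be split out to a sub-agent?

- Tooling: How should tool definitions be written so they are more usable? How do you reduce ambiguity and incorrect calls?

- Iteration: How do you use real behavioral data to iterate on the system prompt, the status conventions, and failure recovery?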

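How to Apply

You can use this minimal checklist to carry the ideas in this article over to your own agent project:

- Define a task tracker: spell out the status enum (for example todo/in_progress/done) and require an update after every action.

- Design a search worker: it accepts only the search query text and returns findings + sources + errors.

- Constrain tool use: forbid fabricated tool output; a tool failure must be recorded explicitly and retried.

- Structure the output: pin down key results with a schema (table/JSON) to make evaluation easier.

- Add verification: key conclusions must cite sources; anything uncertain must be labeled as an assumption.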
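
The verification item in the checklist can be made concrete with a small gate that runs before the final report is written. The following is a minimal sketch, not a prescribed implementation: the `Claim` type, its fields, and the `verification_gate` function are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """One conclusion destined for the final report (hypothetical schema)."""
    text: str
    sources: list[str] = field(default_factory=list)
    is_assumption: bool = False  # True when the claim is explicitly labeled as uncertain


def verification_gate(claims: list[Claim]) -> list[str]:
    """Return a list of problems; an empty list means the gate passes."""
    problems = []
    for claim in claims:
        # A claim is acceptable if it cites a source or is labeled as an assumption.
        if not claim.sources and not claim.is_assumption:
            problems.append(f"unsourced claim: {claim.text}")
    return problems
```

A non-empty result could be fed back to the agent as a request to either find a source or relabel the claim as an assumption.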

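Practice

Exercise: take a complex task of your own (for example, "competitor research + report + action list") and rewrite it as a spec for a Deep Research Agent:

- Write out the responsibilities and the inputs and outputs of the orchestrator and of at least one sub-agent (such as a Search Worker).

- Write out the system prompt's "rules" and status conventions.

- Write out a verification rubric (coverage / correctness / citations / actionability).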

Original (English)

Context engineering requires significant iteration and careful design decisions to build reliable AI agents. This guide takes a deep dive into the practical aspects of context engineering through the development of a basic deep research agent, exploring some of the techniques and design patterns that improve agent reliability and performance.

The Reality of Context Engineering

Building effective AI agents requires substantial tuning of system prompts and tool definitions. The process involves spending hours iterating on:

- System prompt design and refinement

- Tool definitions and usage instructions

- Agent architecture and communication patterns

- Input/output specifications between agents

Don't underestimate the effort required for context engineering. It's not a one-time task but an iterative process that significantly impacts agent reliability and performance.

Agent Architecture Design

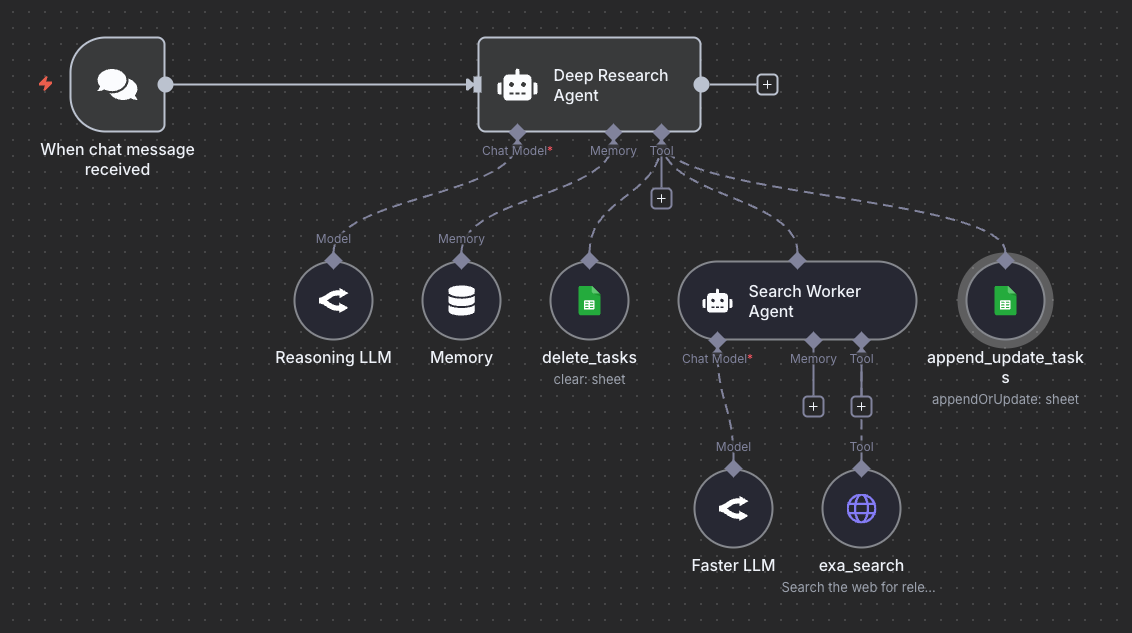

The Original Design Problem

Let's look at a basic deep research agent architecture. The initial architecture connects the web search tool directly to the deep research agent. This design places too much burden on a single agent responsible for:

- Managing tasks (creating, updating, deleting)

- Saving information to memory

- Executing web searches

- Generating final reports

Consequences of this design:

- Context grew too long

- Agent forgot to execute web searches

- Task completion updates were missed

- Unreliable behavior across different queries

The Improved Multi-Agent Architecture

The solution involved separating concerns by introducing a dedicated search worker agent.

Benefits of the multi-agent design:

- Separation of Concerns: The parent agent (Deep Research Agent) handles planning and orchestration, while the search worker agent focuses exclusively on executing web searches

- Improved Reliability: Each agent has a clear, focused responsibility, reducing the likelihood of missed tasks or forgotten operations

- Model Selection Flexibility: Different agents can use different language models optimized for their specific tasks. In this build, the Deep Research Agent uses Gemini 2.5 Pro for complex planning and reasoning, while the Search Worker Agent uses Gemini 2.5 Flash for faster, more cost-effective search execution.

If you are using models from other providers like OpenAI, you can leverage GPT-5 (for planning and reasoning) and GPT-5-mini (for search execution) for similar performance.
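
To make the split concrete, here is a minimal Python sketch of an orchestrator that delegates each search to a worker. It is an illustration under assumptions, not the n8n workflow itself: the `Orchestrator` class, the `fake_search_worker` stub, and the exact model identifier strings in `AGENT_MODELS` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical role-to-model mapping. The model families follow the article;
# the exact identifier strings are assumptions.
AGENT_MODELS = {
    "deep_research_agent": "gemini-2.5-pro",
    "search_worker": "gemini-2.5-flash",
}


@dataclass
class Orchestrator:
    """Plans tasks and tracks status; never performs a search itself."""
    search_worker: Callable[[str], str]
    tasks: dict[str, str] = field(default_factory=dict)     # query -> status
    findings: dict[str, str] = field(default_factory=dict)  # query -> worker output

    def plan(self, queries: list[str]) -> None:
        self.tasks = {query: "todo" for query in queries}

    def run(self) -> None:
        for query in list(self.tasks):
            # The worker receives only the query text, so its context stays isolated.
            self.findings[query] = self.search_worker(query)
            self.tasks[query] = "done"


def fake_search_worker(query: str) -> str:
    """Stand-in for a sub-agent call; a real worker would invoke a model and a search tool."""
    return f"summary of results for: {query}"
```

The point of the design is that the orchestrator's context only ever holds the plan, the statuses, and the worker's condensed output, never the raw search transcript.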

Design Principle: Separating agent responsibilities improves reliability and enables cost-effective model selection for different subtasks.

System Prompt Engineering

Here is the full system prompt for the deep research agent we built in n8n:

You are a deep research agent who will help with planning and executing search tasks to generate a deep research report.

## GENERAL INSTRUCTIONS

The user will provide a query, and you will convert that query into a search plan with multiple search tasks (3 web searches). You will execute each search task and maintain the status of those searches in a spreadsheet.

You will then generate a final deep research report for the user.

For context, today's date is: {{ $now.format('yyyy-MM-dd') }}

## TOOL DESCRIPTIONS

Below are some useful instructions for how to use the available tools.

Deleting tasks: Use the delete_task tool to clear up all the tasks before starting the search plan.

Planning tasks: You will create a plan with the search tasks (3 web searches) and add them to the Google Sheet using the append_update_task tool. Make sure to keep the status of each task updated after completing each search. Each task begins with a todo status and will be updated to a "done" status once the search worker returns information regarding the search task.

Executing tasks: Use the Search Worker Agent tool to execute the search plan. The input to the agent are the actual search queries, word for word.

Use the tools in the order that makes the most sense to you but be efficient.

Let's break it down into parts and discuss why each section is important:

High-Level Agent Definition

The system prompt begins with a clear definition of the agent's role:

You are a deep research agent who will help with planning and executing search tasks to generate a deep research report.

General Instructions

Provide explicit instructions about the agent's workflow:

## GENERAL INSTRUCTIONS

The user will provide a query, and you will convert that query into a search plan with multiple search tasks (3 web searches). You will execute each search task and maintain the status of those searches in a spreadsheet.

You will then generate a final deep research report for the user.

Providing Essential Context

Current Date Information:

Including the current date is crucial for research agents to get up-to-date information:

For context, today's date is: {{ $now.format('yyyy-MM-dd') }}

Why this matters:

- LLMs typically have knowledge cutoffs months or years behind the current date

- Without current date context, agents often search for outdated information

- This ensures agents understand temporal context for queries like "latest news" or "recent developments"

In n8n, you can dynamically inject the current date using built-in functions with customizable formats (date only, date with time, specific timezones, etc.).
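
Outside n8n, the same idea is a small template substitution. The sketch below assumes a plain Python setup; `SYSTEM_PROMPT_TEMPLATE` and `build_system_prompt` are hypothetical names, and the template is abbreviated to the two lines that matter here.

```python
from datetime import date
from typing import Optional

# Abbreviated template; only the role line and the date line are reproduced.
SYSTEM_PROMPT_TEMPLATE = (
    "You are a deep research agent who will help with planning and executing "
    "search tasks to generate a deep research report.\n\n"
    "For context, today's date is: {today}\n"
)


def build_system_prompt(today: Optional[date] = None) -> str:
    """Inject the current date (yyyy-MM-dd) into the system prompt."""
    today = today or date.today()
    # date.isoformat() yields the same yyyy-MM-dd shape used in the n8n expression.
    return SYSTEM_PROMPT_TEMPLATE.format(today=today.isoformat())
```

Accepting the date as a parameter keeps the prompt reproducible in tests, where a fixed date can be passed in.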

Tool Definitions and Usage Instructions

The Importance of Detailed Tool Descriptions

Tool definitions typically appear in two places:

- In the system prompt: Detailed explanations of what tools do and when to use them

- In the actual tool implementation: Technical specifications and parameters

Key Insight: The biggest performance improvements often come from clearly explaining tool usage in the system prompt, not just defining tool parameters.

Example Tool Instructions

The system prompt also includes detailed instructions for using the available tools:

## TOOL DESCRIPTIONS

Below are some useful instructions for how to use the available tools.

Deleting tasks: Use the delete_task tool to clear up all the tasks before starting the search plan.

Planning tasks: You will create a plan with the search tasks (3 web searches) and add them to the Google Sheet using the append_update_task tool. Make sure to keep the status of each task updated after completing each search. Each task begins with a todo status and will be updated to a "done" status once the search worker returns information regarding the search task.

Executing tasks: Use the Search Worker Agent tool to execute the search plan. The input to the agent are the actual search queries, word for word.

Use the tools in the order that makes the most sense to you but be efficient.

Notice that the planning instruction names the exact status values, todo and "done". Initially, without explicit status definitions, the agent would use different status values across runs:

- Sometimes "pending", sometimes "to-do"

- Sometimes "completed", sometimes "done", sometimes "finished"

Be explicit about allowed values. This removes ambiguity and makes behavior far more consistent across runs.
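
Prompt wording can be backed up on the tool side by rejecting anything outside the allowed set. This is a minimal sketch assuming the two statuses named in the prompt; `TaskStatus` and `parse_status` are hypothetical helpers, not part of the original workflow.

```python
from enum import Enum


class TaskStatus(str, Enum):
    """The only status values the task tracker accepts."""
    TODO = "todo"
    DONE = "done"


def parse_status(raw: str) -> TaskStatus:
    """Accept only allowed values; reject look-alikes such as 'pending' or 'completed'."""
    try:
        # Whitespace and case are normalized (a design choice); synonyms are not.
        return TaskStatus(raw.strip().lower())
    except ValueError:
        allowed = ", ".join(status.value for status in TaskStatus)
        raise ValueError(f"invalid status {raw!r}; allowed values: {allowed}") from None
```

Returning the error message to the agent, rather than silently coercing the value, gives it a chance to correct itself on the next call.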

Note that the system prompt also includes this instruction:

Use the tools in the order that makes the most sense to you but be efficient.

What's the reasoning behind this decision?

This provides flexibility for the agent to optimize its execution strategy. During testing, the agent might:

- Execute only 2 searches instead of 3 if it determines that's sufficient

- Combine redundant search queries

- Skip searches that overlap significantly

Here is a specific instruction you can use, if you require all search tasks to be executed:

You MUST execute a web search for each and every search task you create.

Do NOT skip any tasks, even if they seem redundant.

When to use flexible vs. rigid approaches:

- Flexible: During development and testing to observe agent decision-making patterns

- Rigid: In production when consistency and completeness are critical

Context Engineering Iteration Process

The Iterative Nature of Improving Context

Context engineering is not a one-time effort. The development process involves:

- Initial implementation with basic system prompts

- Testing with diverse queries

- Identifying issues (missed tasks, wrong status values, incomplete searches)

- Adding specific instructions to address each issue

- Re-testing to validate improvements

- Repeating the cycle

What's Still Missing

Even after multiple iterations, there are opportunities for further improvement:

Search Task Metadata:

- Augmenting search queries

- Search type (web search, news search, academic search, PDF search)

- Time period filters (today, last week, past month, past year, all time)

- Domain focus (technology, science, health, etc.)

- Priority levels for task execution order

Enhanced Search Planning:

- More detailed instructions on how to generate search tasks

- Preferred formats for search queries

- Guidelines for breaking down complex queries

- Examples of good vs. bad search task decomposition

Date Range Specification:

- Start date and end date for time-bounded searches

- Format specifications for date parameters

- Logic for inferring date ranges from time period keywords
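
As an illustration of what such metadata and date-range logic might look like, here is a minimal sketch. Everything in it is hypothetical: the `SearchTask` fields mirror the wish list above, and the day counts in `PERIOD_DAYS` are assumptions rather than values from the original workflow.

```python
from dataclasses import dataclass, replace
from datetime import date, timedelta
from typing import Optional

# Keywords come from the list above; the day counts are assumptions.
PERIOD_DAYS = {"today": 0, "last week": 7, "past month": 30, "past year": 365}


@dataclass(frozen=True)
class SearchTask:
    query: str
    search_type: str = "web"       # e.g. web, news, academic, pdf
    time_period: str = "all time"
    priority: int = 1              # lower number runs first
    start_date: Optional[date] = None
    end_date: Optional[date] = None


def with_date_range(task: SearchTask, today: date) -> SearchTask:
    """Infer start/end dates from the time period keyword; 'all time' stays unbounded."""
    days = PERIOD_DAYS.get(task.time_period)
    if days is None:
        return task
    return replace(task, start_date=today - timedelta(days=days), end_date=today)
```

Unknown keywords fall through to an unbounded search here; a stricter variant could raise instead so that typos in the period are not silently ignored.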

As this list of possible improvements suggests, web search for AI agents is a hard problem that demands a lot of context engineering.

Advanced Considerations

Sub-Agent Communication

When designing multi-agent systems, carefully consider:

What information does the sub-agent need?

- For the search worker: Just the search query text

- Not the full context or task metadata

- Keep sub-agent inputs minimal and focused

What information should the sub-agent return?

- Search results and relevant findings

- Error states or failure conditions

- Metadata about the search execution
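
One way to pin down this contract is a typed result object. The sketch below is an assumption-laden illustration: `SearchResult`, `run_search_worker`, and the shape of the hits returned by `search_fn` (dicts with `snippet` and `url` keys) are all hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class SearchResult:
    """What the sub-agent hands back to the parent agent."""
    query: str
    findings: list[str] = field(default_factory=list)
    sources: list[str] = field(default_factory=list)
    error: Optional[str] = None      # set when the search failed
    elapsed_seconds: float = 0.0     # execution metadata


def run_search_worker(query: str, search_fn: Callable[[str], list[dict]]) -> SearchResult:
    """Input is just the query text; failures are reported, never hidden."""
    start = time.monotonic()
    try:
        hits = search_fn(query)
    except Exception as exc:
        return SearchResult(query=query, error=str(exc),
                            elapsed_seconds=time.monotonic() - start)
    return SearchResult(
        query=query,
        findings=[hit["snippet"] for hit in hits],
        sources=[hit["url"] for hit in hits],
        elapsed_seconds=time.monotonic() - start,
    )
```

Because the error travels in the same structure as the findings, the parent agent can decide whether to retry without parsing free-form text.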

Context Length Management

As agents execute multiple tasks, context grows:

- Task history accumulates

- Search results add tokens

- Conversation history expands

Strategies to manage context length:

- Use separate agents to isolate context

- Implement memory management tools

- Summarize long outputs before adding to context

- Clear task lists between research queries
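
Two of these strategies, compacting long outputs and clearing state between queries, can be sketched in a few lines. This is a simplified illustration: `compact` uses plain truncation as a stand-in for real summarization (which would typically be a model call), and `ContextBuffer` is a hypothetical helper.

```python
def compact(text: str, max_chars: int = 500) -> str:
    """Stand-in for summarization: keep the head and note how much was dropped."""
    if len(text) <= max_chars:
        return text
    dropped = len(text) - max_chars
    return f"{text[:max_chars]} [truncated {dropped} chars]"


class ContextBuffer:
    """Collects tool outputs for the parent agent's context, compacting each entry."""

    def __init__(self, max_chars_per_entry: int = 500) -> None:
        self.max_chars_per_entry = max_chars_per_entry
        self.entries: list[str] = []

    def add(self, label: str, text: str) -> None:
        self.entries.append(f"{label}: {compact(text, self.max_chars_per_entry)}")

    def clear(self) -> None:
        """Call between research queries so old results do not leak into new ones."""
        self.entries.clear()

    def render(self) -> str:
        return "\n".join(self.entries)
```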

Error Handling in System Prompts

Include instructions for failure scenarios:

ERROR HANDLING:

- If search_worker fails, retry once with rephrased query

- If task cannot be completed, mark status as "failed" with reason

- If critical errors occur, notify user and request guidance

- Never proceed silently when operations fail
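
The first two rules can also be enforced in the code that wraps the sub-agent call. The sketch below assumes the worker raises an exception on failure; `execute_task`, its return shape, and the `rephrase` callback are hypothetical names introduced for illustration.

```python
from typing import Callable


def execute_task(
    query: str,
    search_worker: Callable[[str], str],
    rephrase: Callable[[str], str],
) -> dict:
    """Try the query, retry once with a rephrased query, then mark the task failed."""
    attempt_query = query
    last_error = ""
    for attempt in range(2):
        try:
            result = search_worker(attempt_query)
            return {"query": attempt_query, "status": "done",
                    "result": result, "reason": None}
        except Exception as exc:
            last_error = str(exc)
            if attempt == 0:
                # Exactly one retry, using a rephrased query.
                attempt_query = rephrase(query)
    # Never proceed silently: the failure and its reason are recorded on the task.
    return {"query": query, "status": "failed", "result": None, "reason": last_error}
```

Note that this adds a "failed" status alongside todo and "done", so the allowed status values in the system prompt would need to list it as well.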

Conclusion

Context engineering is critical to building reliable AI agents. It requires:

- Significant iteration time spent tuning prompts and tool definitions

- Careful architectural decisions about agent separation and communication

- Explicit instructions that eliminate assumptions

- Continuous refinement based on observed behavior

- Balance between flexibility and control

The deep research agent example shows how thoughtful context engineering turns an unreliable prototype into a far more dependable system. By applying these principles (clear role definitions, explicit tool instructions, essential context, and iterative improvement), you can build AI agents that deliver high-quality results more consistently.