Mixtral 8x22B

Mixtral 8x22B overview

TL;DR

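Mixtral 8x22B is a larger-scale MoE model (a larger total parameter count, with only a fraction of the parameters active per token), aimed at a better capability-to-cost ratio.

- Commonly cited strengths: long context window, multilingual support, math reasoning, code generation, and native function calling / constrained outputs.

- Deployment advice: on your own tasks, focus on validating long-document recall and format stability (JSON/structured output), and set up a regression evaluation.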
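A regression evaluation for format stability can start very small. The sketch below is a hypothetical helper (`format_pass_rate` is not part of any Mistral tooling) that assumes each test case should return a JSON object with a known set of keys; it reports the fraction of raw model outputs that parse and contain those keys, a number you can track across model or prompt changes.

```python
from __future__ import annotations

import json
from typing import Iterable


def format_pass_rate(outputs: Iterable[str], required_keys: set[str]) -> float:
    """Return the fraction of outputs that are JSON objects containing all required keys."""
    outputs = list(outputs)
    if not outputs:
        return 0.0
    passed = 0
    for raw in outputs:
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not valid JSON at all: counts as a failure
        # A JSON array or scalar also fails; we expect an object with the required keys.
        if isinstance(obj, dict) and required_keys.issubset(obj):
            passed += 1
    return passed / len(outputs)


# Hypothetical outputs collected from one regression run.
outputs = ['{"name": "a", "score": 1}', "not json", '{"name": "b"}', "[1, 2]"]
print(format_pass_rate(outputs, {"name", "score"}))  # 0.25
```

Storing the pass rate per model version makes regressions in structured output visible before they reach production.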

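Reading guide

If you are choosing a model for an engineering project, this page suggests focusing on:

- the value of the 64K context window for long-document and multi-turn tasks

- whether function calling and constrained output work reliably

- hallucination/factuality on your own business data (pairing the model with RAG is recommended)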

Original (English)

Mixtral 8x22B is a new open large language model (LLM) released by Mistral AI. It is a sparse mixture-of-experts (SMoE) model with 39B active parameters out of a total of 141B parameters.
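To make "39B active out of 141B total" concrete, here is a toy sketch of top-k expert routing in pure Python. It assumes the Mixtral-style design of 8 experts per layer with 2 selected per token; the scalar "experts" and the router logits are made up for illustration and stand in for real feed-forward blocks and a learned gating layer.

```python
import math
from typing import Callable, List


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, router_logits: List[float],
                experts: List[Callable[[float], float]], top_k: int = 2) -> float:
    """Route one token to its top_k experts and mix their outputs."""
    # Indices of the top_k largest router logits (ties keep original order).
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    # Gate weights are a softmax over the selected experts only.
    weights = softmax([router_logits[i] for i in top])
    # Only the selected experts run; the other experts are skipped entirely.
    return sum(w * experts[i](x) for w, i in zip(weights, top))


# Eight toy "experts": expert i multiplies its input by (i + 1).
experts = [lambda x, k=i: (k + 1) * x for i in range(8)]
logits = [0.1, 2.0, -1.0, 2.0, 0.0, 0.3, -0.5, 0.2]
# Experts 1 and 3 tie at logit 2.0, so each gets weight 0.5: 0.5*2 + 0.5*4 = 3.0
print(moe_forward(1.0, logits, experts))  # 3.0

active_fraction = 39 / 141
print(f"{active_fraction:.2f}")  # 0.28
```

The reported active fraction (about 0.28) is a bit higher than 2 / 8 = 0.25, presumably because layers outside the experts, such as attention, are shared and always run.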

Capabilities

Mixtral 8x22B is trained to be a cost-efficient model with capabilities that include multilingual understanding, math reasoning, code generation, native function calling support, and constrained output support. The model supports a 64K-token context window, which enables precise information recall from large documents.
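A quick way to check whether a long document will fit in the 64K-token window before sending it is a rough token estimate. This sketch assumes 64K means 65,536 tokens and roughly 4 characters per token (a common heuristic for English text, not a property of Mixtral's tokenizer); the prompt overhead and output budget are hypothetical defaults. For precise counts, use the model's actual tokenizer.

```python
import math

# Assumptions for illustration, not official figures:
CONTEXT_WINDOW = 64 * 1024  # treat "64K" as 65,536 tokens
CHARS_PER_TOKEN = 4.0       # rough heuristic for English text


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (not the real tokenizer)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(document: str, prompt_overhead: int = 500,
                    max_output_tokens: int = 1024,
                    window: int = CONTEXT_WINDOW) -> bool:
    """Check whether document + instructions + reserved output fit in the window."""
    needed = estimate_tokens(document) + prompt_overhead + max_output_tokens
    return needed <= window


print(fits_in_context("x" * 200_000))  # True  (about 50,000 + 1,524 tokens)
print(fits_in_context("x" * 260_000))  # False (about 65,000 + 1,524 tokens)
```

Reserving output tokens up front matters because the context window is shared between the prompt and the generated answer.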

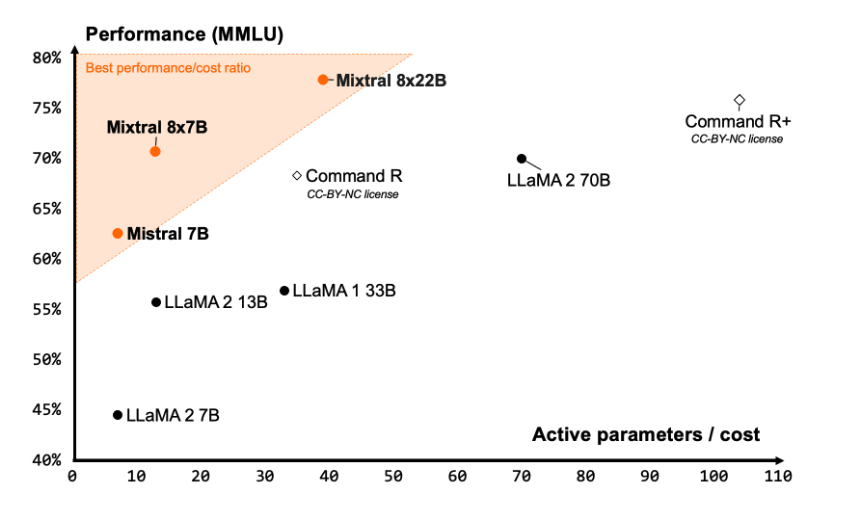

Mistral AI claims that Mixtral 8x22B offers one of the best performance-to-cost ratios among community models and that its sparse activations make it particularly fast.

Source: Mistral AI Blog
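The speed claim follows from sparsity: per-token compute scales with the active parameters, not the total. Below is a back-of-the-envelope estimate using the common rule of thumb of about 2 FLOPs per parameter per token for a forward pass (an approximation that ignores attention cost over long contexts), comparing the sparse model with a hypothetical dense model of the same total size.

```python
ACTIVE_PARAMS = 39e9   # parameters used per token (reported)
TOTAL_PARAMS = 141e9   # all parameters (reported)


def forward_flops_per_token(params: float) -> float:
    """Rule of thumb: ~2 FLOPs (one multiply, one add) per parameter used per token."""
    return 2 * params


sparse = forward_flops_per_token(ACTIVE_PARAMS)
dense = forward_flops_per_token(TOTAL_PARAMS)
print(f"sparse: {sparse / 1e9:.0f} GFLOPs/token")           # sparse: 78 GFLOPs/token
print(f"dense-equivalent: {dense / 1e9:.0f} GFLOPs/token")  # dense-equivalent: 282 GFLOPs/token
print(f"ratio: {dense / sparse:.2f}x")                      # ratio: 3.62x
```

Note that sparsity reduces compute, not memory: all 141B parameters still have to be loaded to serve the model.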

Results

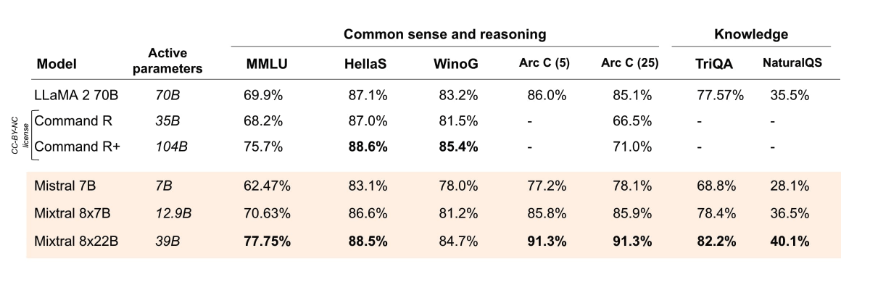

According to the officially reported results, Mixtral 8x22B (with 39B active parameters) outperforms state-of-the-art open models like Command R+ and Llama 2 70B on several reasoning and knowledge benchmarks such as MMLU, HellaSwag, TriviaQA, and NaturalQuestions.

Source: Mistral AI Blog

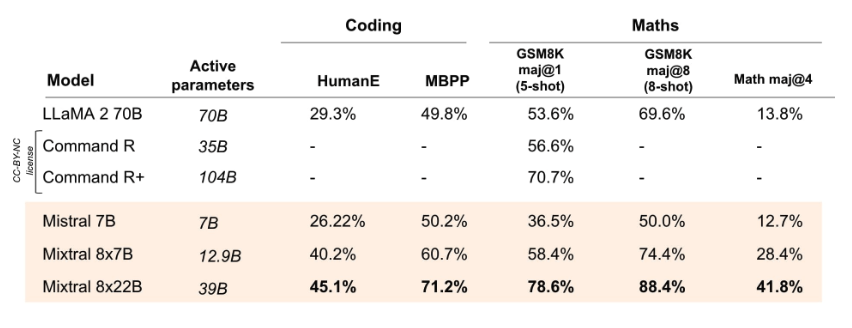

Mistral AI also reports that Mixtral 8x22B outperforms the other open models in its comparison on coding and math benchmarks such as GSM8K, HumanEval, and MATH. Mixtral 8x22B Instruct reportedly achieves a score of 90% on GSM8K (maj@8).

Source: Mistral AI Blog
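maj@8 means the model is sampled 8 times per problem and the most frequent final answer is the one that gets scored. Here is a minimal sketch of that scoring, assuming answers have already been extracted and normalized to strings (for GSM8K, typically the final number); the function names and sample data are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def majority_answer(samples: Sequence[str]) -> str:
    """Most frequent answer; ties go to the answer seen first."""
    return Counter(samples).most_common(1)[0][0]


def maj_at_k_accuracy(all_samples: List[Sequence[str]],
                      references: List[str], k: int = 8) -> float:
    """Fraction of problems whose majority answer over the first k samples is correct."""
    if len(all_samples) != len(references):
        raise ValueError("need one list of samples per reference answer")
    if not references:
        return 0.0
    correct = sum(
        majority_answer(samples[:k]) == ref
        for samples, ref in zip(all_samples, references)
    )
    return correct / len(references)


# Two hypothetical problems, 8 sampled answers each.
samples = [
    ["18"] * 5 + ["20"] * 3,                   # majority "18"
    ["3", "4", "4", "5", "4", "3", "4", "3"],  # majority "4"
]
print(maj_at_k_accuracy(samples, ["18", "3"]))  # 0.5
```

Majority voting rewards answers the model reaches consistently, so maj@8 scores are usually higher than single-sample accuracy and are not directly comparable to it.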

More information on Mixtral 8x22B and how to use it is available here: https://docs.mistral.ai/getting-started/open_weight_models/#operation/listModels
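As a starting point for using function calling, the sketch below only builds (does not send) an HTTP request with the Python standard library. The endpoint URL, the model id `open-mixtral-8x22b`, and the OpenAI-style `tools` schema are assumptions based on Mistral's public API at the time of writing; verify them against the documentation linked above. The `get_weather` tool is hypothetical.

```python
import json
import os
import urllib.request

# Assumed values; confirm against the Mistral documentation.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL_ID = "open-mixtral-8x22b"

# Hypothetical tool, used only to illustrate the function-calling schema.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(user_message: str, api_key: str) -> urllib.request.Request:
    """Build a chat-completion request that lets the model decide whether to call the tool."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [get_weather_tool],
        "tool_choice": "auto",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_request("What's the weather in Paris?",
                    os.environ.get("MISTRAL_API_KEY", "YOUR_KEY"))
# Sending it requires network access and a valid key:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"])
```

If the model decides to call the tool, your code is responsible for running `get_weather` and sending its result back in a follow-up message.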

The model is released under an Apache 2.0 license.