OLMo

OLMo overview

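TL;DR

OLMo is an open language model effort from the Allen Institute for AI. It emphasizes open data, open training code, and open evaluation, with the goal of making LLM research more reproducible and transparent.

- Good for learning: data curation and the training and evaluation pipeline (especially in research or teaching settings).

- Engineering advice: run an evaluation on your own task before deciding whether to adopt it (or to use it as a reference model).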

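Reading guide

The value of this page lies not only in the benchmarks but in how far the openness goes. While reading, pay attention to:

- Data and the data pipeline (e.g., Dolma)

- Training and intermediate artifacts (weights/checkpoints/logs)

- Evaluation tools and reproducibility (e.g., OLMo-Eval, Catwalk)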

Original (English)

In this guide, we provide an overview of the Open Language Model (OLMo), including prompts and usage examples. The guide also includes tips, applications, limitations, papers, and additional reading materials related to OLMo.

Introduction to OLMo

The Allen Institute for AI (AI2) has released a new open language model and framework called OLMo. The effort aims to provide full access to data, training code, models, and evaluation code in order to accelerate the collective study of language models.

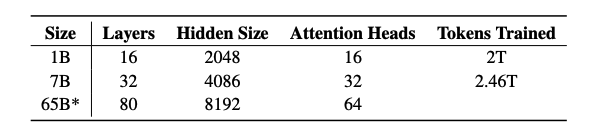

Their first release includes four variants at the 7B parameter scale and one model at the 1B scale, all trained on at least 2T tokens. This is the first of many planned releases, which will include an upcoming 65B OLMo model.

The release includes:

- full training data, including the code that produces the data

- full model weights, training code, logs, metrics, and inference code

- several checkpoints per model

- evaluation code

- fine-tuning code

All the code, weights, and intermediate checkpoints are released under the Apache 2.0 License.

OLMo-7B

Both the OLMo-7B and OLMo-1B models adopt a decoder-only transformer architecture. They follow improvements from other models such as PaLM and Llama:

- no biases

- a non-parametric layer norm

- SwiGLU activation function

- Rotary positional embeddings (RoPE)

- a vocabulary of 50,280 tokens
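Several of the architectural choices listed above can be illustrated in a few lines. The sketch below is a minimal, pure-Python illustration, not OLMo's implementation. It shows three pieces: a layer norm with no learnable gain or bias, a bias-free SwiGLU feed-forward block, and rotary positional embeddings. The function names and the small list-based "matrices" are hypothetical. The RoPE variant rotates adjacent dimension pairs; some implementations pair the first and second halves of the vector instead, and both variants give the same relative-position property.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def nonparametric_layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize to zero mean and unit variance, with no learnable gain or bias."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def silu(z: float) -> float:
    """Swish/SiLU, z * sigmoid(z), written to avoid overflow for large |z|."""
    if z >= 0:
        return z / (1.0 + math.exp(-z))
    e = math.exp(z)
    return z * e / (1.0 + e)


def linear(w: Matrix, x: Vector) -> Vector:
    """Bias-free linear layer: y = W x (there is no '+ b' term)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def swiglu_ffn(x: Vector, w_gate: Matrix, w_up: Matrix, w_down: Matrix) -> Vector:
    """SwiGLU feed-forward block: W_down (silu(W_gate x) * (W_up x))."""
    hidden = [silu(g) * u for g, u in zip(linear(w_gate, x), linear(w_up, x))]
    return linear(w_down, hidden)


def rope(x: Vector, pos: int, base: float = 10000.0) -> Vector:
    """Rotary embedding: rotate each pair (x[2i], x[2i+1]) by pos * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even head dimension")
    out = [0.0] * d
    for i in range(d // 2):
        angle = pos * base ** (-2 * i / d)
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s
        out[2 * i + 1] = a * s + b * c
    return out


if __name__ == "__main__":
    q, k = [1.0, 0.5, -0.3, 2.0], [0.2, -1.0, 0.7, 0.4]
    dot = lambda a, b: sum(p * r for p, r in zip(a, b))
    # The q.k score depends only on the relative offset (5 - 3 == 12 - 10).
    print(math.isclose(dot(rope(q, 5), rope(k, 3)), dot(rope(q, 12), rope(k, 10))))  # True
```

The final check illustrates why RoPE is used. Rotating queries and keys by their absolute positions makes their dot product depend only on the distance between the positions.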

Dolma Dataset

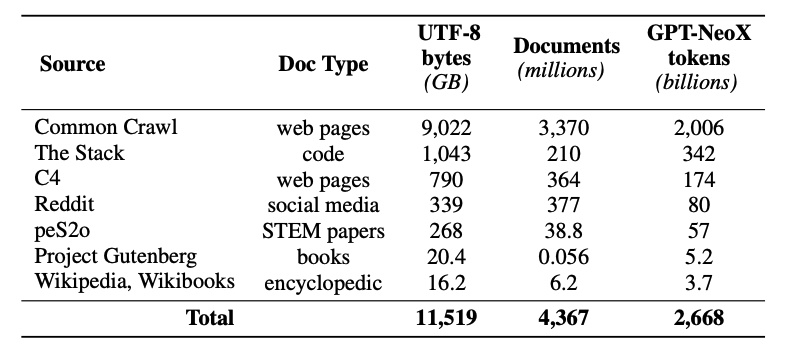

The release also includes a pre-training dataset called Dolma: a diverse, multi-source corpus of 3 trillion tokens across 5B documents drawn from 7 different data sources. Creating Dolma involves language filtering, quality filtering, content filtering, deduplication, multi-source mixing, and tokenization.
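To make the curation steps concrete, here is a toy sketch of a filter, deduplicate, and mix pipeline. It is not Dolma's actual tooling, and every heuristic in it is a hypothetical stand-in:

- an ASCII-ratio check replaces a real language-ID classifier;
- a minimum word count replaces real quality filtering;
- a tiny blocklist replaces real content filtering;
- exact hash-based deduplication stands in for the real deduplication step.

Tokenization is left out.

```python
import hashlib
import random
from typing import Dict, Iterable, Iterator, List

# Hypothetical blocklist used only by the toy content filter below.
BLOCKLIST = {"spamword"}


def looks_english(text: str, min_ascii_ratio: float = 0.9) -> bool:
    """Language-filter stand-in; a real pipeline would use a language-ID model."""
    return sum(ch.isascii() for ch in text) / max(len(text), 1) >= min_ascii_ratio


def passes_quality(text: str, min_words: int = 5) -> bool:
    """Quality-filter stand-in: drop very short documents."""
    return len(text.split()) >= min_words


def passes_content(text: str) -> bool:
    """Content-filter stand-in: drop documents containing blocklisted words."""
    return not (set(text.lower().split()) & BLOCKLIST)


def dedup(docs: Iterable[str]) -> Iterator[str]:
    """Exact deduplication by hashing whitespace-normalized text."""
    seen = set()
    for doc in docs:
        key = hashlib.sha1(" ".join(doc.split()).encode("utf-8")).hexdigest()
        if key not in seen:
            seen.add(key)
            yield doc


def clean_source(docs: Iterable[str]) -> List[str]:
    """Apply the filters in order, then deduplicate what survives."""
    kept = (d for d in docs if looks_english(d) and passes_quality(d) and passes_content(d))
    return list(dedup(kept))


def mix_sources(sources: Dict[str, List[str]], weights: Dict[str, float],
                n: int, seed: int = 0) -> List[str]:
    """Multi-source mixing: pick a source by weight, then a document from it (with replacement)."""
    rng = random.Random(seed)
    names = [s for s in sources if sources[s]]
    picks = rng.choices(names, weights=[weights[s] for s in names], k=n)
    return [rng.choice(sources[name]) for name in picks]
```

In this sketch, mixing samples with replacement, so a source with a high weight can be upsampled beyond its original size. How Dolma actually sets its mixture is described in the paper, not here.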

The training dataset is a 2T-token sample from Dolma. A special EOS token is appended to the end of each document, and the tokens are then concatenated. Training instances consist of groups of consecutive 2048-token chunks, which are then shuffled.
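The packing step described above can be sketched as follows. The sketch makes three simplifying assumptions: each 2048-token chunk is one training instance, the trailing partial chunk is dropped, and the EOS id is passed in by the caller, since the real id depends on the tokenizer. The function name is hypothetical.

```python
import random
from typing import List


def pack_into_instances(docs: List[List[int]], eos_id: int,
                        seq_len: int = 2048, seed: int = 0) -> List[List[int]]:
    """Append EOS to each tokenized document, concatenate, cut into fixed-length chunks, shuffle."""
    stream: List[int] = []
    for tokens in docs:
        stream.extend(tokens)
        stream.append(eos_id)  # marks the document boundary inside the packed stream
    # Keep only full chunks so that every instance has exactly seq_len tokens (an assumption).
    n_full = len(stream) // seq_len
    instances = [stream[i * seq_len:(i + 1) * seq_len] for i in range(n_full)]
    random.Random(seed).shuffle(instances)
    return instances
```

For example, the call `pack_into_instances([[1, 2, 3], [4, 5], [6, 7, 8, 9]], eos_id=0, seq_len=4)` yields the chunks `[1, 2, 3, 0]`, `[4, 5, 0, 6]`, and `[7, 8, 9, 0]` in shuffled order. A chunk can therefore span a document boundary, and the EOS token lets the model learn where one document ends.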

More training details, including the hardware used to train the models, can be found in the paper.

Results

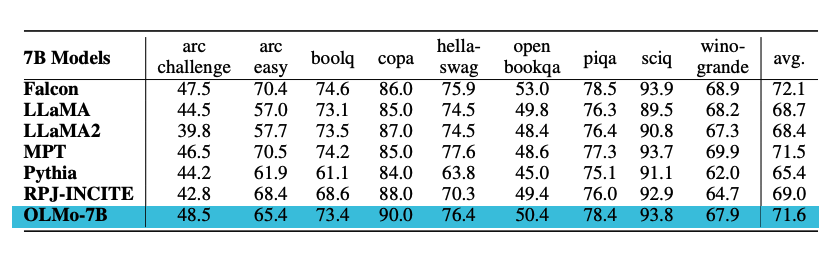

The models are evaluated on downstream tasks using Catwalk and compared with several other publicly available models, such as Falcon and Llama 2. Specifically, the evaluation uses a set of tasks that aim to measure commonsense reasoning, including datasets such as PIQA and HellaSwag. The authors perform zero-shot evaluation using rank classification (i.e., candidate completions are ranked by likelihood) and report accuracy. OLMo-7B outperforms all other models on 2 end tasks and stays in the top 3 on 8 of the 9 end tasks. The chart in this section summarizes the results.
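Zero-shot rank classification can be sketched independently of any particular harness. The code below does not use Catwalk's API. It assumes a hypothetical `loglik(context, completion)` callable that returns the model's log-likelihood of the completion given the context; with a real model, that would be the sum of the completion's token log-probabilities. The sketch picks the highest-scoring candidate and reports accuracy.

```python
from typing import Callable, List, Sequence, Tuple

# (context, candidate completions, index of the correct completion)
Example = Tuple[str, Sequence[str], int]
# Hypothetical scorer: log-likelihood of `completion` given `context`.
LogLikelihood = Callable[[str, str], float]


def rank_classify(loglik: LogLikelihood, context: str, candidates: Sequence[str]) -> int:
    """Return the index of the completion the model finds most likely."""
    scores = [loglik(context, c) for c in candidates]
    return max(range(len(candidates)), key=scores.__getitem__)


def zero_shot_accuracy(loglik: LogLikelihood, examples: List[Example]) -> float:
    """Fraction of examples where the top-ranked completion is the gold one."""
    if not examples:
        raise ValueError("need at least one example")
    correct = sum(rank_classify(loglik, ctx, cands) == gold for ctx, cands, gold in examples)
    return correct / len(examples)
```

Some evaluation harnesses normalize scores by completion length so that longer answers are not penalized. That choice is left to the scorer in this sketch.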

Prompting Guide for OLMo

Below is a minimal, reusable prompting template. Copy it and replace the placeholders:

You are a helpful assistant.

Task: <describe the task>

Constraints:

- Follow the requested output format exactly.

- If you are unsure, say "Unsure" and list what information is missing.

- Do not fabricate citations or facts.

Output format:

<define a strict JSON schema or a bullet list template>

Input:

<paste the user input or context here>
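If you call the model from code, the template above can be filled programmatically. This sketch stores the template as a Python format string. The placeholder names `task`, `output_format`, and `user_input`, and the function `build_prompt`, are hypothetical.

```python
PROMPT_TEMPLATE = """You are a helpful assistant.

Task: {task}

Constraints:
- Follow the requested output format exactly.
- If you are unsure, say "Unsure" and list what information is missing.
- Do not fabricate citations or facts.

Output format:
{output_format}

Input:
{user_input}
"""


def build_prompt(task: str, output_format: str, user_input: str) -> str:
    """Fill the template; braces inside the substituted values are kept literally."""
    return PROMPT_TEMPLATE.format(task=task, output_format=output_format, user_input=user_input)


print(build_prompt("Classify the sentiment of the review.",
                   '{"label": "positive" | "negative"}',
                   "I loved it."))
```

Because `str.format` only interprets braces in the template itself, a JSON schema passed as `output_format` needs no escaping.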

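Common directions for iteration:

- If the output drifts from the format: make the Output format stricter (field names, enum values, examples).

- If the model hallucinates: require an Evidence field (snippets copied from the context), or add RAG.

- If the task is complex: have the model produce a plan first, then execute it (plan → draft → verify).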
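The first two tips can be enforced mechanically on the model's reply. The sketch below assumes a hypothetical JSON output schema with an `answer` field and an `evidence` list. It checks that the reply parses as JSON, that it contains the required keys, and that every evidence snippet appears verbatim in the supplied context.

```python
import json
from typing import List

REQUIRED_KEYS = {"answer", "evidence"}  # hypothetical schema, for illustration only


def check_output(raw: str, context: str) -> List[str]:
    """Return a list of problems; an empty list means the reply passed every check."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]
    problems = [f"missing key: {k}" for k in sorted(REQUIRED_KEYS - set(data))]
    evidence = data.get("evidence", [])
    if not isinstance(evidence, list):
        problems.append("evidence must be a list of strings")
        evidence = []
    for quote in evidence:
        # A quote that is not a verbatim substring of the context may be hallucinated.
        if not isinstance(quote, str) or quote not in context:
            problems.append(f"evidence not found verbatim in context: {quote!r}")
    return problems
```

A non-empty result can be appended to a follow-up prompt, asking the model to fix those specific problems. This is one simple way to implement the verify step.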

Figures source: OLMo: Accelerating the Science of Language Models