Grok-1

Grok-1 overview

TL;DR

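- Grok-1 is one of the open-weight MoE models released by xAI (a base model checkpoint). It is better suited to research and comparison than to use as an out-of-the-box chat agent.

- Treat it as a "base model sample": it needs additional instruction tuning / alignment before it is well suited to dialogue and production applications.

- For engineering use, start with task-level evaluation, safety policies (guardrails), and any necessary fine-tuning or prompt constraints.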

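Reading guide

When reading this page, pay attention to:

- The inference characteristics of MoE (the fraction of active weights)

- The pretraining cutoff (and its effect on how current the model's knowledge is)

- How to interpret the benchmark results (they are useful for comparison, but do not guarantee the same performance on your own task)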

Original (English)

Grok-1 is a mixture-of-experts (MoE) large language model (LLM) with 314B parameters. xAI has openly released both the base model weights and the network architecture.

Grok-1 was trained by xAI. It is an MoE model that activates 25% of its weights for a given token at inference time. The pretraining cutoff date for Grok-1 is October 2023.
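To make the "25% of the weights per token" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. The expert count (8) and the number of experts selected per token (2) are assumptions chosen because they are consistent with the 25% figure above; they are not stated in this page. The toy experts, `route`, and `moe_forward` are hypothetical names for illustration and do not reflect xAI's implementation.

```python
import math
import random

NUM_EXPERTS = 8  # assumption: not stated in the text
TOP_K = 2        # assumption: 2 of 8 experts gives a 25% active fraction


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, top_k=TOP_K):
    """Pick the top_k experts for one token and renormalize their weights."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits):
    """Weighted sum over the selected experts only; the rest are never run."""
    return sum(w * experts[i](x) for i, w in route(router_logits))


# Toy experts: each one just scales its input by a different constant.
experts = [lambda x, s=s: s * x for s in range(1, NUM_EXPERTS + 1)]

random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
chosen = route(logits)
active_fraction = len(chosen) / NUM_EXPERTS
output = moe_forward(1.0, experts, logits)

print(f"experts used for this token: {[i for i, _ in chosen]}")
print(f"active fraction of experts: {active_fraction:.0%}")
```

In a real MoE layer only the expert feed-forward blocks are sparsely activated, while attention and embedding weights are shared by every token, so the fraction of experts selected is only an approximation of the fraction of total weights that are active.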

As stated in the official announcement, Grok-1 is the raw base model checkpoint from the pretraining phase, which means that it has not been fine-tuned for any specific application such as conversational agents.
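Because a base checkpoint continues text rather than following instructions, a common way to steer it is a few-shot prompt that ends where the model should start writing. The helper below is a minimal sketch of that idea; `build_few_shot_prompt` and the `Q:`/`A:` layout are hypothetical choices for illustration, not a format prescribed by xAI.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Build a completion-style prompt from (question, answer) pairs.

    The prompt ends with an open "A:" so a base model is nudged to
    continue with an answer instead of unrelated text.
    """
    parts = []
    if instruction:
        parts.append(instruction.strip())
    for question, answer in examples:
        parts.append(f"Q: {question}\nA: {answer}")
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)


examples = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
]
prompt = build_few_shot_prompt(
    examples,
    "What is 3 * 5?",
    instruction="Answer each question briefly.",
)
print(prompt)
```

When sending such a prompt to a base model, it usually helps to cap the generation length or stop at the next `Q:` marker, since the model may otherwise keep inventing further question and answer pairs.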

The model has been released under the Apache 2.0 license.

Results and Capabilities

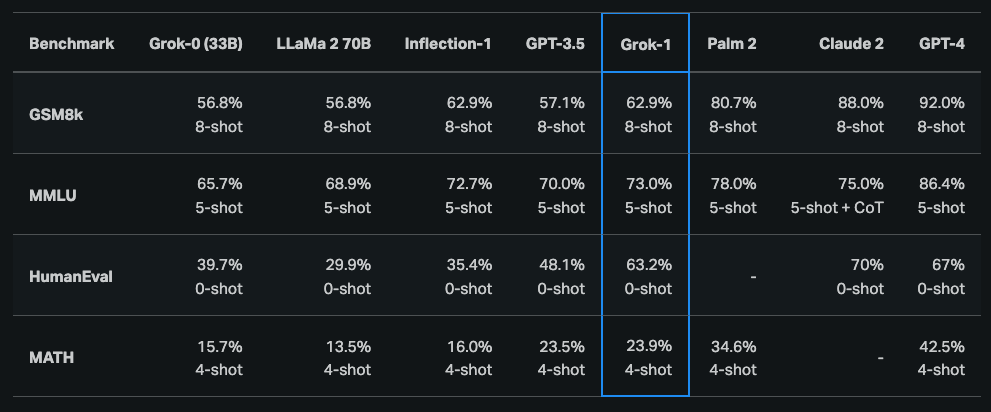

According to the initial announcement, Grok-1 demonstrated strong capabilities across reasoning and coding tasks. The most recent publicly available results show that Grok-1 achieves 63.2% on the HumanEval coding task and 73% on MMLU. It generally outperforms GPT-3.5 and Inflection-1 but still falls behind stronger models such as GPT-4.

Grok-1 was also reported to score a C (59%) compared to a B (68%) from GPT-4 on the Hungarian national high school finals in mathematics.

Check out the model here: https://github.com/xai-org/grok-1

Due to the size of Grok-1 (314B parameters), xAI recommends a multi-GPU machine to test the model.
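A rough back-of-the-envelope calculation shows why a single GPU is not enough. The sketch below estimates the memory needed just to hold 314B weights at a few numeric precisions. The 80 GB per-GPU capacity and the 1.2x overhead factor for activations and caches are assumptions for illustration, not figures from xAI.

```python
import math

TOTAL_PARAMS = 314e9  # parameter count from the text

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus(weight_gb, gpu_memory_gb=80.0, overhead=1.2):
    """Lower bound on GPU count.

    gpu_memory_gb and overhead are assumed values; real requirements
    depend on batch size, sequence length, and the serving stack.
    """
    return math.ceil(weight_gb * overhead / gpu_memory_gb)


for precision, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, nbytes)
    print(f"{precision}: ~{gb:.0f} GB of weights, at least {min_gpus(gb)} GPUs")
```

Note that although only about a quarter of the weights are active for any one token, all experts must still be resident in memory, because different tokens are routed to different experts.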