Deep Agents

Deep Agents design essentials: Planning, division of labor, retrieval, verification, and iteration

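TL;DR

- Many AI agents turn "shallow" on long, multi-step tasks. Plans fall apart, context explodes, tool calls become chaotic, and the final output cannot be verified.

- "Deep Agents" emphasize 5 key capabilities: Planning, Orchestrator & Sub-agent architecture, Context Retrieval, Context Engineering, and Verification.

- Making an AI agent deep is usually not about "piling on more prompts." It is systems engineering: division of labor, memory, observability, evaluation, and recoverable execution paths.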

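Core Concepts

"Deep Agents" can be understood as agentic systems for complex tasks. Beyond generating answers, they maintain a plan over a long horizon, hand subtasks to different sub-agents, write intermediate artifacts to external memory, and use verification to make their output more reliable.

A common implementation looks like this:

- An orchestrator owns the overall plan and scheduling.

- Multiple sub-agents each focus on a specific subtask (search, coding, analysis, verification, writing).

- External memory (files / notes / vector store / database) stores intermediate results and key facts.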
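Here is a minimal sketch of that layout. It assumes sub-agents are plain Python functions standing in for LLM calls and a dict standing in for external memory. The names search_worker, analyst, writer, and Orchestrator are hypothetical and not taken from any framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical sub-agents. In a real system each would wrap an LLM call
# with its own system prompt, tools, and clean context.
def search_worker(task: str) -> str:
    return f"sources for: {task}"

def analyst(task: str) -> str:
    return f"analysis of: {task}"

def writer(task: str) -> str:
    return f"draft about: {task}"

@dataclass
class Orchestrator:
    sub_agents: Dict[str, Callable[[str], str]]
    # Stand-in for external memory (files / notes / vector store / database).
    memory: Dict[str, str] = field(default_factory=dict)

    def run(self, plan: List[Tuple[str, str, str]]) -> str:
        """Execute (step_id, agent_name, task) steps in order and merge the outputs."""
        for step_id, agent_name, task in plan:
            output = self.sub_agents[agent_name](task)  # delegate to a sub-agent
            self.memory[step_id] = output               # persist the intermediate result
        # Integration is a plain join here; a real orchestrator would synthesize.
        return "\n".join(self.memory[step_id] for step_id, _, _ in plan)

orch = Orchestrator({"search": search_worker, "analysis": analyst, "writing": writer})
result = orch.run([
    ("s1", "search", "deep agents"),
    ("s2", "analysis", "deep agents"),
    ("s3", "writing", "deep agents"),
])
print(result)
```

Later steps can read orch.memory by step id instead of relying on the scrolling conversation.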

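How to Apply

To move a "shallow agent" toward a "deep agent," start with these steps:

- Explicit planning: have the orchestrator output a structured plan and require a status update at every step (to avoid silent skips).

- Separate contexts: give each sub-agent only the minimal context it needs (separation of concerns) to reduce the risk of running out of context length.

- External memory: write intermediate artifacts to external storage (e.g. notes/, reports/, facts.json) so later steps reference files and records rather than relying on the scrolling conversation.

- Verification gate: key conclusions must pass LLM-as-a-Judge or human review. When in doubt, state the uncertainty explicitly.

- Observability: log every tool call, every plan update, and every failure along with the reason for each retry, so you can iterate on Context Engineering.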
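The explicit-planning and observability items above can be sketched as a plan object. Each step carries a status, skipping a step requires a recorded reason, and every status change is appended to a log. Step, Plan, and the status names are a hypothetical structure for illustration, not a specific framework's API.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List, Optional

VALID_STATUSES = {"pending", "in_progress", "done", "skipped", "failed"}

@dataclass
class Step:
    id: str
    description: str
    status: str = "pending"
    note: Optional[str] = None  # e.g. why a step was skipped or failed

@dataclass
class Plan:
    steps: List[Step]
    log: List[dict] = field(default_factory=list)  # observability trail

    def update(self, step_id: str, status: str, note: Optional[str] = None) -> None:
        if status not in VALID_STATUSES:
            raise ValueError(f"unknown status: {status}")
        # A skip without a reason is exactly the "silent skip" to avoid.
        if status == "skipped" and not note:
            raise ValueError("a skipped step must record a reason")
        step = next(s for s in self.steps if s.id == step_id)
        self.log.append({"step": step_id, "from": step.status, "to": status, "note": note})
        step.status, step.note = status, note

    def unfinished(self) -> List[str]:
        return [s.id for s in self.steps if s.status not in ("done", "skipped")]

    def to_json(self) -> str:
        # Structured form the orchestrator can emit or re-read.
        return json.dumps([asdict(s) for s in self.steps], ensure_ascii=False)

plan = Plan([Step("1", "Search sources"), Step("2", "Summarize"), Step("3", "Translate")])
plan.update("1", "done")
plan.update("3", "skipped", note="user asked for English only")
print(plan.unfinished())  # ['2']
```

Because every transition lands in plan.log, a failed or skipped step can later be traced when iterating on prompts and tool definitions.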

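Self-check rubric

- Planning: Is there an updatable plan? Can it retry and recover? Are the reasons for skipped steps recorded?

- Orchestrator & Sub-agent: Are responsibilities clearly divided? Are sub-agent inputs minimal? Can their outputs be merged?

- Context Retrieval: Are key facts written to external memory? Is the retrieval strategy controllable (hybrid / semantic / keyword)?

- Context Engineering: Are the system prompt, tool descriptions, and output schema explicit enough?

- Verification: Is there systematic evaluation? Can it block the effects of hallucination and prompt injection?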

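Practice

Exercise: design a minimal architecture for a "Deep Research Agent" (writing code is optional).

- The sub-agents should include at least a Search Worker, an Analyst, and a Verifier.

- For each sub-agent, describe its inputs and outputs, which tools it calls, and which external files store its artifacts.

- Design a verification flow: in which cases must the agent search again, and in which cases is human confirmation required?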
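One possible sketch of the verification routing the exercise asks for follows. The Claim fields, the two thresholds, and the three outcomes are assumptions for illustration. A real Verifier would produce the confidence score itself, for example via LLM-as-a-Judge.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Claim:
    text: str
    sources: List[str]         # URLs or file paths produced by the Search Worker
    confidence: float          # Verifier's score in [0, 1] (assumed scale)
    high_stakes: bool = False  # e.g. medical, legal, or financial conclusions

# Illustrative thresholds, not recommended values.
MIN_SOURCES = 2
MIN_CONFIDENCE = 0.7

def route(claim: Claim) -> str:
    """Decide what happens to a claim after the Verifier has scored it."""
    if len(claim.sources) < MIN_SOURCES:
        return "re_search"     # not enough evidence: send back to the Search Worker
    if claim.high_stakes or claim.confidence < MIN_CONFIDENCE:
        return "human_review"  # evidence exists but is weak, or the stakes are high
    return "accept"

print(route(Claim("X", ["a"], 0.9)))       # re_search
print(route(Claim("X", ["a", "b"], 0.5)))  # human_review
print(route(Claim("X", ["a", "b"], 0.9)))  # accept
```

The design choice here is that missing evidence triggers a new search first, while weak or high-stakes evidence goes to a human. Other orderings are equally defensible.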

References

- LangChain: Deep Agents (Labs)

- Anthropic: Writing tools for agents

- Anthropic: Building agents with the Claude Agent SDK

- PromptingGuide: Context Engineering Guide

Original (English)

Most agents today are shallow.

They easily break down on long, multi-step problems (e.g., deep research or agentic coding).

That’s changing fast!

We’re entering the era of "Deep Agents": systems that strategically plan, remember, and delegate intelligently to solve very complex problems.

We at the DAIR.AI Academy, along with folks from LangChain and Claude Code and, more recently, individuals like Philipp Schmid, have been documenting this idea.

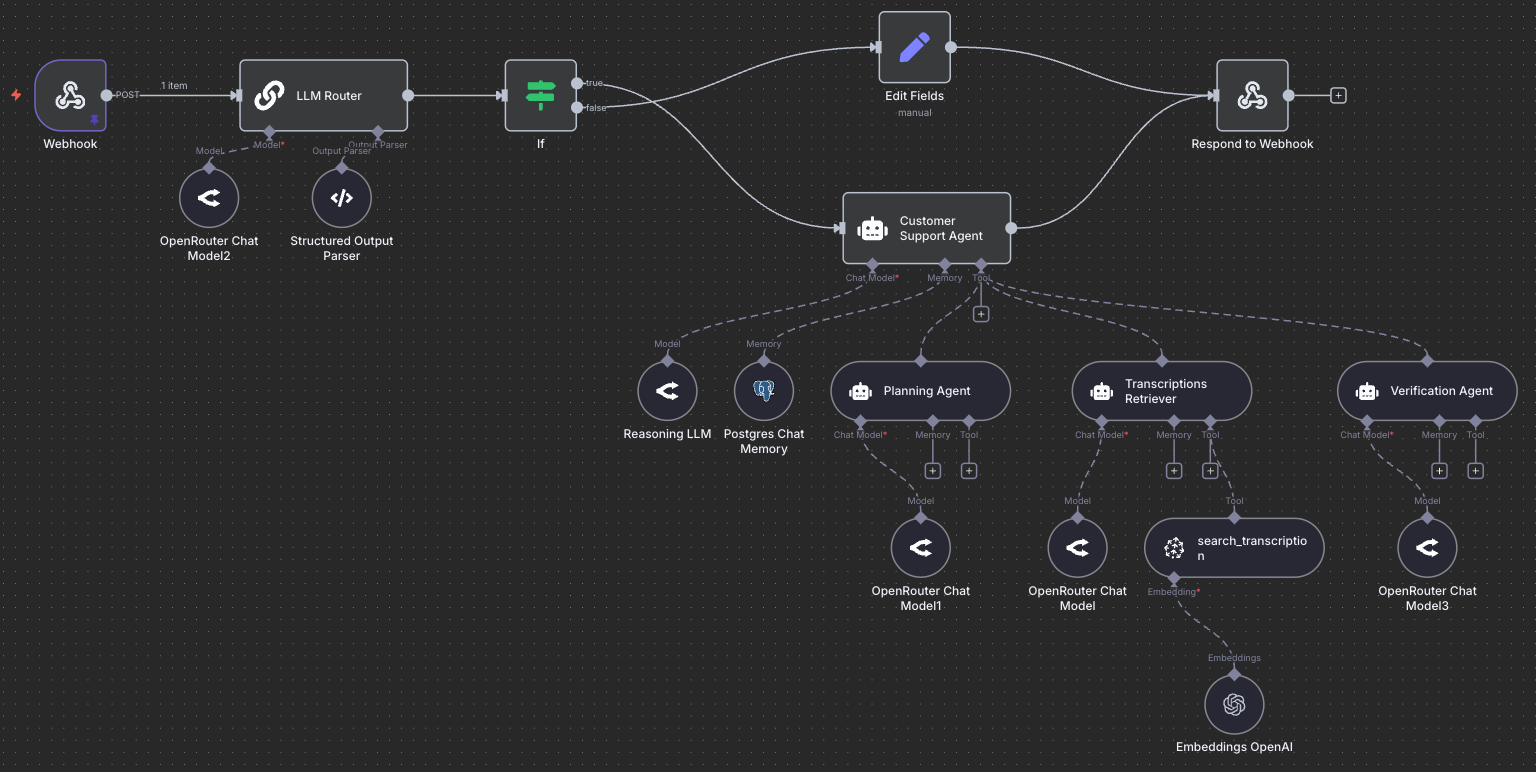

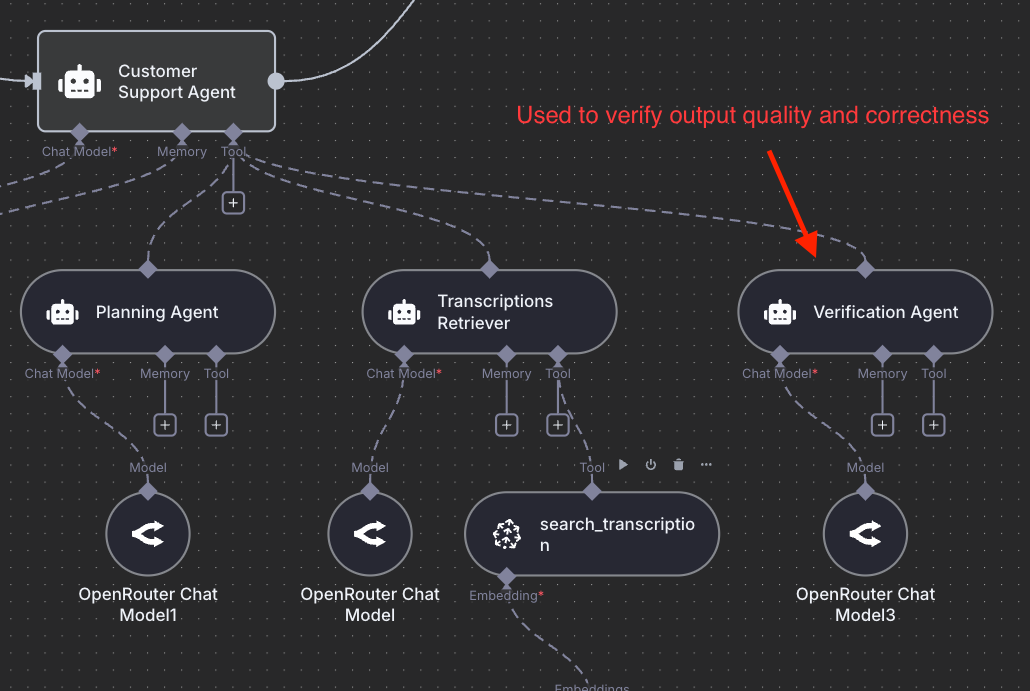

Here is an example of a deep agent built to power the DAIR.AI Academy's customer support system, which students use to ask questions about our trainings and courses:

Here’s roughly the core idea behind Deep Agents (based on my own thoughts and notes that I've gathered from others):

Planning

Instead of reasoning ad hoc inside a single context window, Deep Agents maintain structured task plans they can update, retry, and recover from. Think of it as a living to-do list that guides the agent toward its long-term goal. To experience this, try planning in Claude Code or Codex: the results are significantly better when you enable it before executing a task.
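Here is a sketch of the "retry and recover" part. It assumes the plan is a flat list of step names whose state is checkpointed to a JSON file after every step. run_with_recovery and the state format are hypothetical. A real agent would call tools or sub-agents inside execute.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List

def run_with_recovery(plan_path: str, steps: List[str],
                      execute: Callable[[str], None],
                      max_attempts: int = 3) -> Dict[str, str]:
    """Run steps in order, retrying failures and checkpointing state to plan_path."""
    # Resume from a previous checkpoint if one exists.
    if os.path.exists(plan_path):
        with open(plan_path, encoding="utf-8") as f:
            state = json.load(f)
    else:
        state = {step: "pending" for step in steps}

    for step in steps:
        if state.get(step) == "done":
            continue  # finished in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                execute(step)
                state[step] = "done"
                break
            except Exception as exc:  # record why it failed, then retry
                state[step] = f"failed (attempt {attempt}): {exc}"
        # Checkpoint after every step so progress survives a crash.
        with open(plan_path, "w", encoding="utf-8") as f:
            json.dump(state, f)
        if state[step] != "done":
            break  # retries exhausted: stop instead of running on missing inputs
    return state

# Usage: a hypothetical tool that times out on its first call for "analyze".
calls = {"analyze": 0}

def flaky_execute(step: str) -> None:
    if step == "analyze":
        calls["analyze"] += 1
        if calls["analyze"] == 1:
            raise RuntimeError("tool timeout")

path = os.path.join(tempfile.mkdtemp(), "plan.json")
print(run_with_recovery(path, ["search", "analyze", "write"], flaky_execute))
# {'search': 'done', 'analyze': 'done', 'write': 'done'}
```

Stopping at the first step that exhausts its retries keeps later steps from running on missing inputs. Rerunning the function resumes from that step.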

We also recently wrote about the value of brainstorming longer with Claude Code. It shows the power of planning, expert context, and human-in-the-loop collaboration (your expertise gives you an important edge when working with deep agents). Planning will also be critical for long-horizon problems (think agents for scientific discovery, which is what comes next).

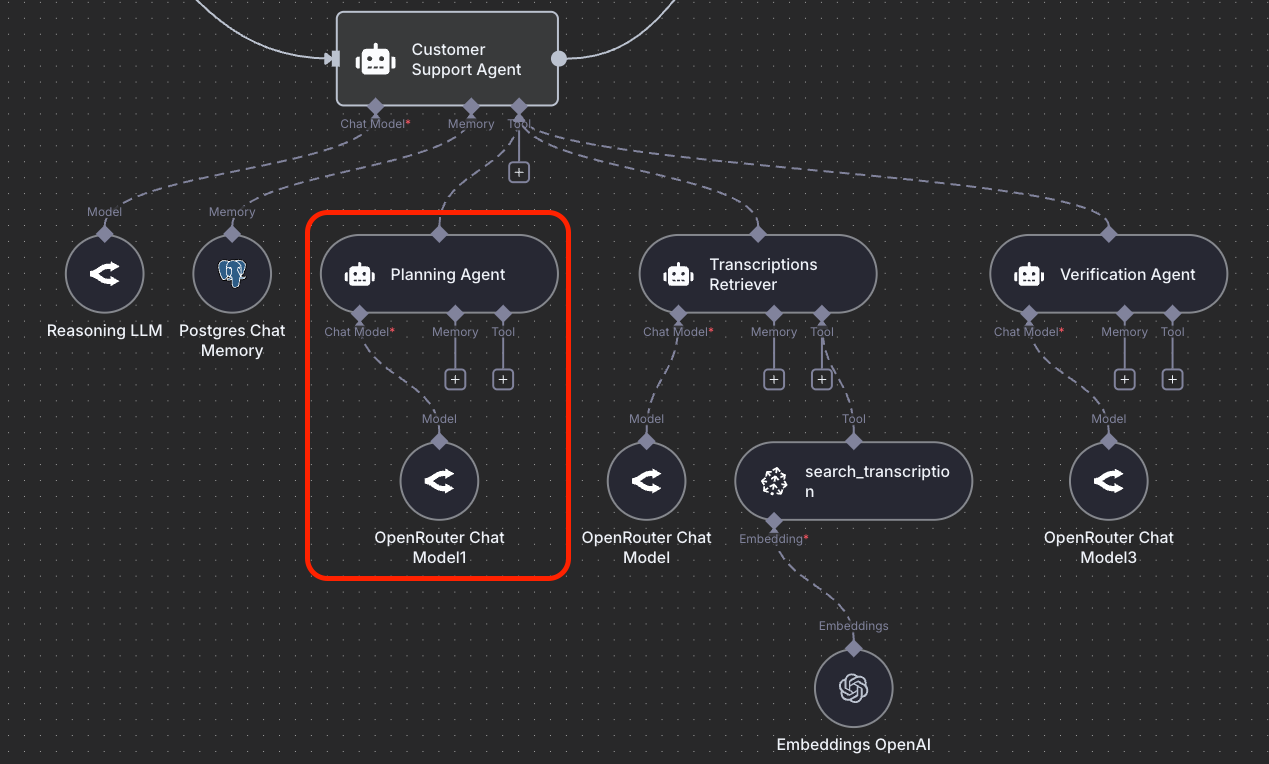

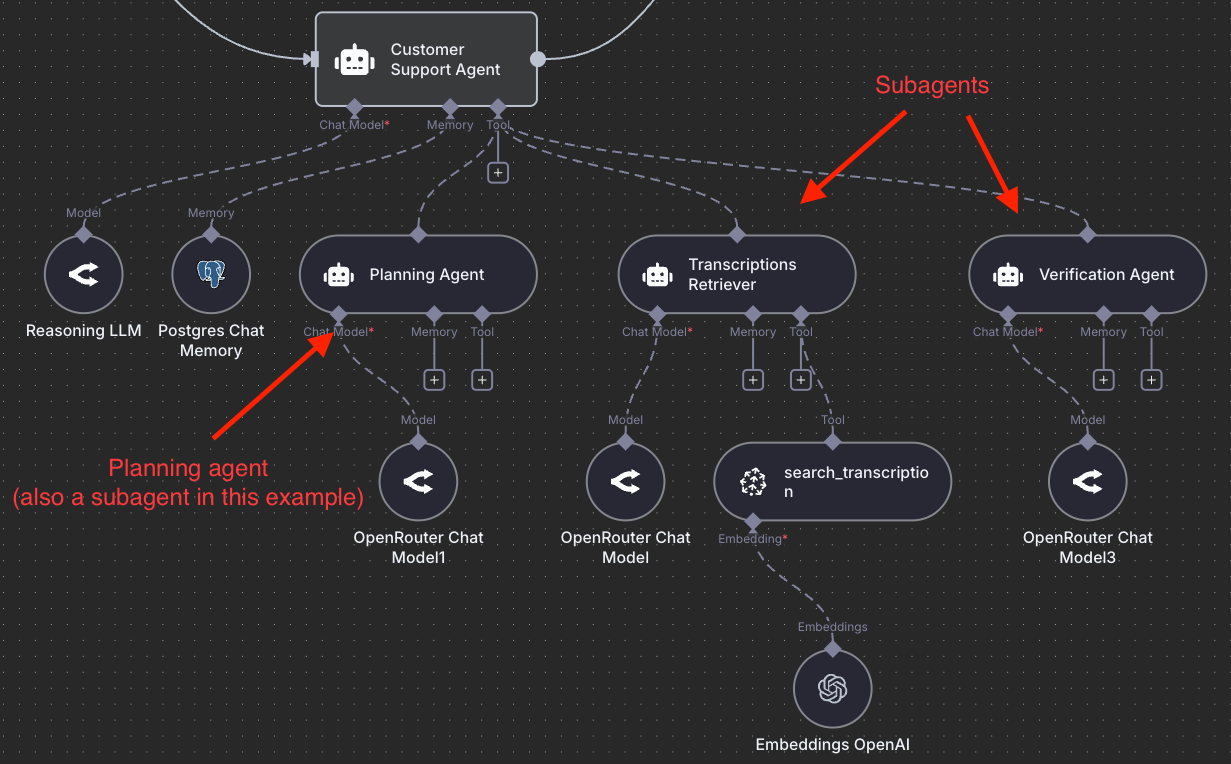

Orchestrator & Sub-agent Architecture

One big agent (typically with a very long context) is no longer enough. I've seen arguments against multi-agent systems and in favor of monolithic systems, but I'm skeptical about this.

The orchestrator-sub-agent architecture is one of the most powerful LLM-based agentic architectures you can leverage today for any domain you can imagine. An orchestrator manages specialized sub-agents such as search agents, coders, KB retrievers, analysts, verifiers, and writers, each with its own clean context and domain focus.

The orchestrator delegates intelligently, the sub-agents execute efficiently, and the orchestrator integrates their outputs into a coherent result. Claude Code popularized this approach for coding. Sub-agents turn out to be particularly useful for managing context efficiently through separation of concerns.
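Separation of concerns can be made concrete by letting each sub-agent declare the only pieces of shared state it may see. The orchestrator then builds that sub-agent's context from those keys alone. The state keys and the SUB_AGENT_INPUTS convention are illustrative assumptions.

```python
from typing import Any, Dict, List

# Hypothetical shared state the orchestrator accumulates during a task.
shared_state: Dict[str, Any] = {
    "user_goal": "Compare two vector databases",
    "conversation": ["...long chat history..."] * 50,
    "search_results": ["doc A", "doc B"],
    "draft": "",
}

# Each sub-agent declares the only keys it is allowed to see (assumed convention).
SUB_AGENT_INPUTS: Dict[str, List[str]] = {
    "search": ["user_goal"],
    "analyst": ["user_goal", "search_results"],
    "writer": ["user_goal", "search_results", "draft"],
}

def build_context(agent: str, state: Dict[str, Any]) -> Dict[str, Any]:
    """Return a clean, minimal context: only the declared keys, nothing else."""
    return {key: state[key] for key in SUB_AGENT_INPUTS[agent]}

ctx = build_context("analyst", shared_state)
print(sorted(ctx))  # ['search_results', 'user_goal']
```

The long conversation never reaches the analyst, so its context stays short regardless of how long the overall session runs.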

I wrote a few notes on the power of using orchestrators and sub-agents here and here.

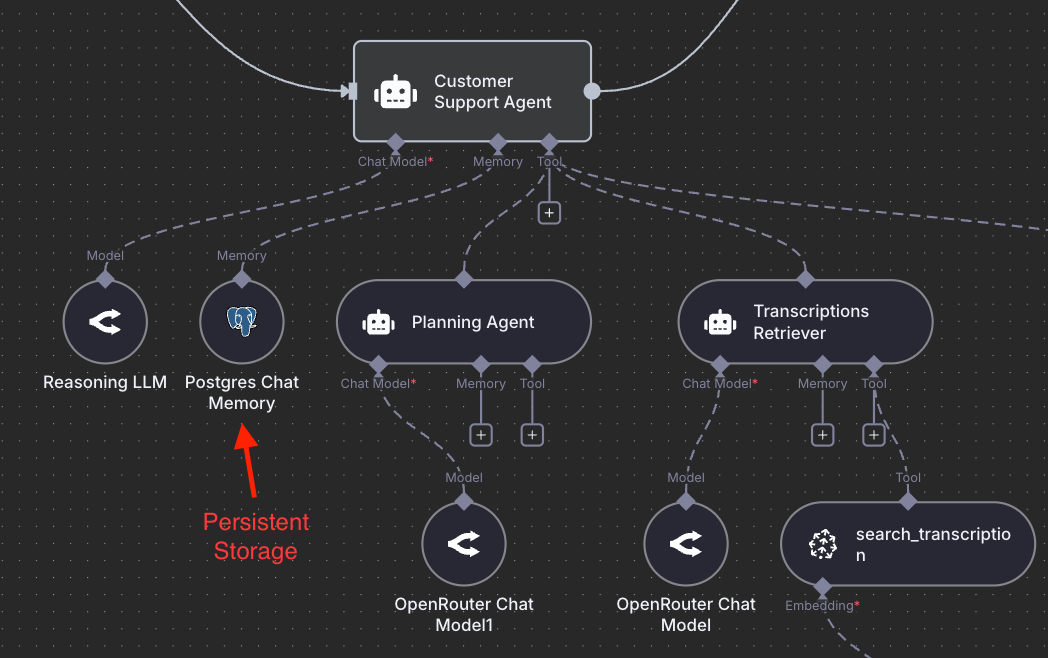

Context Retrieval and Agentic Search

Deep Agents don’t rely on conversation history alone. They store intermediate work in external memory like files, notes, vectors, or databases, letting them reference what matters without overloading the model’s context. High-quality structured memory is a thing of beauty.
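Here is a minimal file-backed memory along these lines. It assumes facts are stored as a JSON list in a single file such as facts.json and looked up by substring match. FactStore, its record format, and the example facts are hypothetical. A production system might use a database or vector store instead.

```python
import json
import tempfile
from pathlib import Path
from typing import List

class FactStore:
    """Minimal external memory: one JSON list of {fact, source} records on disk."""

    def __init__(self, path: Path):
        self.path = path
        if not self.path.exists():
            self.path.write_text("[]", encoding="utf-8")

    def add(self, fact: str, source: str) -> None:
        facts = json.loads(self.path.read_text(encoding="utf-8"))
        facts.append({"fact": fact, "source": source})
        self.path.write_text(json.dumps(facts, ensure_ascii=False, indent=2), encoding="utf-8")

    def lookup(self, keyword: str) -> List[dict]:
        # Read from disk each time, so any step or sub-agent sees the latest facts.
        facts = json.loads(self.path.read_text(encoding="utf-8"))
        return [f for f in facts if keyword.lower() in f["fact"].lower()]

store = FactStore(Path(tempfile.mkdtemp()) / "facts.json")
store.add("Final project: build an agentic RAG system", "notes/course.md")
store.add("Search step returned 3 candidate sources", "reports/search.md")
print(store.lookup("rag"))
# [{'fact': 'Final project: build an agentic RAG system', 'source': 'notes/course.md'}]
```

Because the facts live in a file, a later step (or a fresh process) can reopen the same path instead of carrying them in the prompt.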

Take a look at recent work like ReasoningBank and Agentic Context Engineering for some really cool ideas on how to better optimize memory building and retrieval. Building with the orchestrator-sub-agent architecture also means you can leverage hybrid memory techniques (e.g., agentic search + semantic search) and let the agent decide which strategy to use.
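Here is a sketch of a hybrid strategy with an agent-selectable mode. Several stand-ins are assumed. Keyword overlap stands in for agentic search. Cosine similarity over character trigrams stands in for embedding-based semantic search. The two rankings are merged with reciprocal rank fusion (RRF, using the commonly cited constant k = 60). The documents are toy data.

```python
import math
from collections import Counter
from typing import Dict, List

DOCS = {
    "d1": "orchestrator delegates tasks to sub-agents",
    "d2": "semantic search uses embeddings of notes",
    "d3": "keyword search matches exact tokens in files",
}

def keyword_rank(query: str) -> List[str]:
    terms = set(query.lower().split())
    scores = {d: len(terms & set(t.lower().split())) for d, t in DOCS.items()}
    return sorted(scores, key=lambda d: -scores[d])

def _trigrams(text: str) -> Counter:
    text = text.lower()
    return Counter(text[i:i + 3] for i in range(len(text) - 2))

def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def semantic_rank(query: str) -> List[str]:
    # Stand-in for embedding similarity: cosine over character trigram counts.
    q = _trigrams(query)
    scores = {d: _cosine(q, _trigrams(t)) for d, t in DOCS.items()}
    return sorted(scores, key=lambda d: -scores[d])

def retrieve(query: str, strategy: str = "hybrid", k: int = 60) -> List[str]:
    """The agent picks the strategy: 'keyword', 'semantic', or 'hybrid'."""
    if strategy == "keyword":
        return keyword_rank(query)
    if strategy == "semantic":
        return semantic_rank(query)
    # Reciprocal rank fusion: score(d) = sum over rankings of 1 / (k + rank(d)).
    fused: Dict[str, float] = {}
    for ranking in (keyword_rank(query), semantic_rank(query)):
        for rank, doc in enumerate(ranking, start=1):
            fused[doc] = fused.get(doc, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda d: -fused[d])

print(retrieve("keyword search files")[0])  # d3
```

RRF only uses ranks, so the two scorers never need comparable score scales. That is why it is a common default for merging heterogeneous retrievers.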

Context Engineering

One of the worst things you can do when interacting with these types of agents is to give them underspecified instructions or prompts. Prompt engineering was and is important, but we will use the newer term context engineering to emphasize the importance of building context for agents. The instructions need to be more explicit, detailed, and intentional in defining when to plan, when to use a sub-agent, how to name files, and how to collaborate with humans. Context engineering also covers structured outputs, system prompt optimization, context compaction, evaluating how effective the context is, and optimizing tool definitions.
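To illustrate explicit tool definitions and structured outputs, here is a sketch with two parts. The first is a tool description that spells out when to use the tool and how to name files. The second is a small validator that rejects malformed tool-call arguments before they are executed. The save_note tool is hypothetical. The name / description / input_schema shape follows the JSON Schema style used by several tool-calling APIs, so check your provider's documentation for the exact format.

```python
import json
from typing import Any, Dict, List

# An explicit tool definition: what it does, when to use it, how to name files.
SAVE_NOTE_TOOL: Dict[str, Any] = {
    "name": "save_note",
    "description": (
        "Save an intermediate finding to notes/. Use after every search step. "
        "File names must be lowercase-kebab-case and end in .md."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "filename": {"type": "string"},
            "content": {"type": "string"},
        },
        "required": ["filename", "content"],
    },
}

_TYPES = {"string": str, "number": (int, float), "boolean": bool, "array": list, "object": dict}

def validate_call(tool: Dict[str, Any], raw_args: str) -> List[str]:
    """Return a list of problems with a model's tool-call arguments (empty = OK)."""
    try:
        args = json.loads(raw_args)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    schema = tool["input_schema"]
    problems = [f"missing field: {f}" for f in schema["required"] if f not in args]
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unexpected field: {name}")
        elif not isinstance(value, _TYPES[spec["type"]]):
            problems.append(f"{name} should be {spec['type']}")
    return problems

print(validate_call(SAVE_NOTE_TOOL, '{"filename": "search-results.md", "content": "3 sources"}'))  # []
print(validate_call(SAVE_NOTE_TOOL, '{"filename": 42}'))
# ['missing field: content', 'filename should be string']
```

Returning the problem list to the model (instead of failing silently) gives it a concrete message to correct on its next attempt.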

Read our previous guide on context engineering to learn more: Context Engineering Deep Dive

Verification

Next to context engineering, verification is one of the most important components of an agentic system, though it is discussed less often. Verification means checking outputs, either automatically (LLM-as-a-Judge) or by a human. Because modern LLMs are so effective at generating text (even in domains like math and coding), it's easy to forget that they still suffer from hallucination, sycophancy, prompt injection, and a number of other issues. Verification makes your agents more reliable and more production-ready, and you can build good verifiers by leveraging systematic evaluation pipelines.
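One way to read "systematic evaluation pipelines" is to score the verifier itself against human-labeled cases before trusting it. In this sketch, naive_verifier is a hypothetical word-overlap stand-in for an LLM-as-a-Judge call, and the three cases are toy data.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass
class Case:
    answer: str
    source: str
    is_supported: bool  # human label: does the source support the answer?

def naive_verifier(answer: str, source: str) -> bool:
    """Hypothetical stand-in for LLM-as-a-Judge: accept only if every word
    of the answer appears in the source."""
    source_words = source.lower().split()
    return all(word in source_words for word in answer.lower().split())

def evaluate(verifier: Callable[[str, str], bool], cases: List[Case]) -> Dict[str, float]:
    tp = fp = fn = 0
    for c in cases:
        accepted = verifier(c.answer, c.source)
        if accepted and c.is_supported:
            tp += 1
        elif accepted and not c.is_supported:
            fp += 1  # hallucination let through
        elif not accepted and c.is_supported:
            fn += 1  # correct answer wrongly rejected
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "hallucinations_passed": fp,
    }

cases = [
    Case("paris is the capital", "paris is the capital of france", True),
    Case("berlin is the capital", "paris is the capital of france", False),
    Case("the capital is paris", "france has paris as its capital city", True),
]
print(evaluate(naive_verifier, cases))
# {'precision': 1.0, 'recall': 0.5, 'hallucinations_passed': 0}
```

Tracking hallucinations_passed (false positives) separately matters because that is the failure the verifier exists to stop. The low recall here shows how a too-strict verifier also rejects correct paraphrases.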

Final Words

This is a huge shift in how we build with AI agents. Deep agents also feel like an important building block for what comes next: personalized proactive agents that can act on our behalf. I will write more on proactive agents in a future post.

The figures you see in the post describe an agentic RAG system that students need to build for the course's final project.